Regulating for algorithmic accountability: global trajectories, proposals and risks

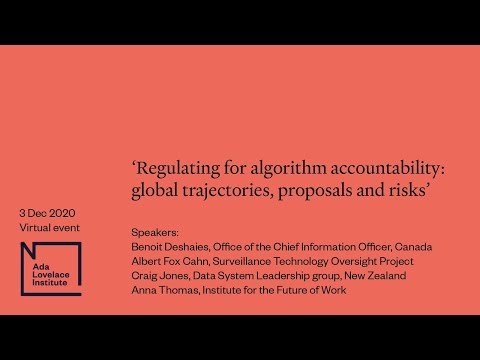

Okay, we'll get going. Hi everyone, my name is Carly Kind, and I'm the Director of the Ada Lovelace Institute. Many of you will know who we are because you're attending our event, and hopefully you've been to one before, but we're a research institute and deliberative body based in the UK. We have a remit to ensure that data and AI work for people and society, which means we undertake policy-facing research to understand the societal and ethical questions that arise with the use of data-driven technologies and AI. We are really thrilled to have an excellent panel here tonight, and we are so grateful that you've all joined us. I know this time of year is quite busy and, after quite a long and difficult year, it's probably taking its toll, so it's great to see so many of you here.

A few quick housekeeping matters. As usual, and you'll be very familiar with this by now, there's a Q&A function at the bottom of the screen. Please put your questions in throughout the presentations, and I will relay them to the panelists when we move to open questions. You can also use the chat function; just be aware that chat usually goes to all panelists and attendees. We invite you to engage with us on Twitter, where we're @AdaLovelaceInst. You can also find a video of this event online tomorrow, which means, for your information, that we're recording it right now. We're really pleased to have about 70 attendees in the audience today, and a very international panel, so I will move ahead and introduce those people to you now.

We are really happy to be co-hosting this with the Institute for the Future of Work, and I'd like to welcome its Executive Director, Anna Thomas. We had hoped to have Helen Mountfield here as well, who was the chair of the Institute for the Future of Work's Equality Task Force. Unfortunately, Helen had an emergency at the last minute and has had to miss the event, but Anna will of course be presenting on the Institute's work. I'm also really happy to be joined today by Benoit Deshaies, who is the Acting Director for Data and Artificial Intelligence at the Office of the Chief Information Officer at the Treasury Board of Canada. We also have Craig Jones, who's the Deputy Chief Executive of Stats NZ, New Zealand's national statistics body. And we have Albert Fox Cahn, who's the Executive Director of the Surveillance Technology Oversight Project, a US-based organization. Albert was a participant in the New York City Automated Decision Systems Task Force, which is a really interesting initiative that we're going to hear more about tonight.

So, as you can see, we're taking a really international view this evening of what regulating for algorithmic accountability looks like. A number of events throughout the year prompted us to do that. In the UK in particular, we've had several high-profile problems with algorithmic systems; "disasters" might be one word for them. The algorithmic system that Ofqual deployed earlier this year to decide school results was, I think, quite a pivotal moment for talking about algorithmic accountability, at least in the UK. There have of course been other developments. There has been a long-running debate about facial recognition, and earlier this year the Court of Appeal issued a decision on the obligations of police forces that want to deploy facial recognition technology. And there have been a number of other high-profile instances of public sector use of algorithms that have raised questions, in particular around accountability and transparency. So I think there's very much a sense in the UK that we're moving towards answering the question: how do we put principles such as transparency and accountability into practice? There are a few different options on the table. The Institute for the Future of Work is one of the organizations that have come forward with a proposal, which they've called an Accountability for Algorithms Act, and I will speak further to that shortly. So we're really interested in the best approach to regulating for algorithmic accountability. What can we learn from other countries? What is best practice out there? What should we not do, and where have mistakes been made previously? That's why we wanted to invite this excellent group of panelists this evening.

I think we've gained a few more attendees in the meantime, so we're at almost full capacity now, and we'll get going. I would be remiss not to remind you that if you would like to use closed captions, you can turn them on by pressing the closed caption button at the bottom of your screen.

So I'm very pleased to invite Benoit first, who's joining us from the Canadian government. Benoit is going to speak a little about the Algorithmic Impact Assessment framework in Canada and about Canada's experience with it.

Thank you. The Algorithmic Impact Assessment is a tool under the Directive on Automated Decision-Making, which I'll talk about first to set the context. The Directive was published in April 2019, so it's a fairly new instrument. It sets mandatory requirements for federal government departments that want to automate service decisions. This covers full automation and also partial automation, where the system makes recommendations to officers. It applies to external services.

The mandatory requirements include transparency requirements. Departments must give notice that decisions are automated, and they must provide a meaningful explanation after the decision has been rendered, to ensure that no black-box algorithm is being used. There's a strong focus on quality assurance. Regular testing and monitoring is required: we need to verify data quality and test the data to identify its biases. Employee training is planned, and departments must consult legal services. For riskier decisions, there are human intervention checkpoints, often called the "human in the loop" elements. There is also a requirement to provide recourse options, so that clients can challenge decisions that have been made in an automated fashion. And lastly, once we deem that the risk of the algorithm warrants it, a third-party evaluation, that is, a peer review, is required.

So how do you identify which systems are expected to have larger impacts or larger risks? That's where the Algorithmic Impact Assessment, or AIA, comes in. The AIA is an online questionnaire that helps assess the impact an algorithm will have. The result of the AIA is an impact level, from level one, for low impact, to level four, the highest impact. Level one could be campsite allocation by the parks authority. At level four you could have immigration decisions, imprisonment decisions, the kinds of things that affect people's liberty, well-being and so on. This impact level determines how strict the requirements of the Directive are. The Directive is written so that it says "for level one you need to do this, for level two you need to do this," because we wanted the mitigation measures to be proportional to the risk of the algorithm. That proportionality is important. It lets you innovate without being burdened by rules and procedures, while still applying the rigour that's required when it's warranted.

The AIA is a completely open tool that's free to use. The source code is on GitHub, and many cities, countries and corporations have already used it to evaluate their AI projects.

If we look at the origins of the Directive and the AIA, it's fair to say they're heavily influenced by administrative law. We don't make a secret of it; it's written right in there. In a way, we think it takes the long-standing principles of transparency, accountability and procedural fairness and explains what they mean in the digital world. What does it mean for an algorithm to be transparent and fair, for example? The Directive and the AIA have several objectives. They aim to reduce risk to Canadians and federal institutions, and to make decisions more efficient, accurate, consistent and interpretable. They aim to ensure that decisions comply with procedural fairness and due process requirements, that the impacts of algorithms are assessed, and that negative outcomes, where they could be present, are reduced. And finally, they aim for transparency about where we use this automation; we want to make sure that this information is available to the public.

The Directive was released in April 2019, but I would say it really came into force in April of this year. We gave departments a full year to get ready, and only systems built after April 2020 have to comply with it. We've been in discussion with departments and with the people who have to comply, and there are a few lessons I can share. I'll share the two main ones. The first, which surprised some people, is how much collaboration it takes to complete an algorithmic impact assessment, and to automate decisions in general. Building software has never been just about IT; it's always a collaboration between IT and the business. That's even more true for automated decisions. To correctly understand the impacts, you need to talk to IT developers, privacy experts, legal advisors, the program owners who really understand the target population, and also the impacted users. So completing an algorithmic impact assessment requires a lot of different expertise, and you need a multidisciplinary approach. That was the first surprise, I think, for some departments. The second was that automation offered them a unique opportunity. They could look at their historical data and at the historical trends of human processes and, often for the first time, take stock of what biases may have been present. It's not always a pleasant discovery. But as you collect the data and put it together to train an AI system, it's a good opportunity to look for those biases and address them.
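The sketch below illustrates the mechanism Benoit describes: a questionnaire score sets an impact level, and that level decides which mitigation measures apply. Everything specific in it is a hypothetical assumption. That includes the three questions, the 0 to 4 point scale, the quarter-based thresholds and the requirement sets. None of these reproduce the official AIA questionnaire or the Directive's actual per-level requirements.

```python
"""A toy impact-level calculator in the spirit of Canada's AIA.

The scoring rule, thresholds and requirement sets are illustrative
assumptions, not the official AIA questionnaire or Directive text.
"""

# Hypothetical: a level applies once the score exceeds this share of the maximum.
LEVEL_THRESHOLDS = [(0.75, 4), (0.50, 3), (0.25, 2)]

# Hypothetical requirement sets: a common baseline plus extra
# mitigations that switch on at higher impact levels.
BASELINE = {"notice", "explanation", "testing_and_monitoring", "recourse"}
EXTRA_BY_LEVEL = {
    1: set(),
    2: {"peer_review"},
    3: {"peer_review", "human_in_the_loop"},
    4: {"peer_review", "human_in_the_loop"},
}


def impact_level(raw_score: int, max_score: int) -> int:
    """Map a questionnaire score to an impact level from 1 (low) to 4 (high)."""
    if max_score <= 0 or not 0 <= raw_score <= max_score:
        raise ValueError("raw_score must lie between 0 and a positive max_score")
    share = raw_score / max_score
    # Check the highest threshold first; fall back to level 1.
    for threshold, level in LEVEL_THRESHOLDS:
        if share > threshold:
            return level
    return 1


def requirements_for(level: int) -> set[str]:
    """Return the (hypothetical) mitigation measures required at a level."""
    return BASELINE | EXTRA_BY_LEVEL[level]


# Hypothetical answers: three questions, each scored 0-4 points (maximum 12).
campsite = {"affects_liberty": 0, "irreversible_impacts": 1, "uses_personal_data": 1}
immigration = {"affects_liberty": 4, "irreversible_impacts": 4, "uses_personal_data": 3}

for name, answers in [("campsite", campsite), ("immigration", immigration)]:
    level = impact_level(sum(answers.values()), max_score=12)
    print(name, level, sorted(requirements_for(level)))
```

The sketch aims to capture proportionality. Every system gets the same baseline, and heavier measures such as peer review or a human in the loop attach only as the assessed impact rises.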
There's been quite a bit of research on this Directive, including a few academic papers, and on the whole I'd say it's fairly well received and applauded. The measures it proposes seem to be complete, but there are some questions around its scope; people wonder what is excluded from it. I think this may stem from a lack of clarity about what it means to make or recommend a decision. Is alerting a decision-maker to an unusual condition making a decision, or a recommendation? What about data matching: finding information in other sources using fuzzy logic and presenting it? Is that making a recommendation? What about assigning a risk score to an individual, or a prediction, or a classification? Is that a recommendation or a decision? Now consider the other end of the spectrum, where the system says "you should choose this" but a human reviews it and makes the final decision. There, everybody more or less agrees that the system is making a recommendation. For the earlier examples it's not as obvious. We need to have the discussion, to educate people and to decide where to draw the line. For the record, I would consider all the examples I gave to be making a recommendation in an automated fashion. So my team has some work to do to clarify this.

I realize I'm talking quite a bit, so I'll close and summarize. This event is about how organizations can be accountable in deploying algorithms, and I think the Directive is a great inspiration for that. If I were to summarize its big principles, the first is transparency about where and how you're using automation. The second is that you need to provide an explanation of how the decision was made. If you can give an explanation that everybody can agree with, it shows that you understand the system and that it's fair. The third is showing that you've done your due diligence in identifying the potential negative impacts, which is where the AIA comes in. That means understanding the biases, how you've addressed them and how you've minimized the system's potential negative impacts. And lastly, you always provide a recourse mechanism to dispute the decision if you're not happy with it. So with that, I'll turn it back to you.

Thank you, that was super clear and helpful. Can I ask one clarifying question? You mentioned risk scores, which you would consider to be within the remit. Is that type of risk scoring or predictive system clearly within the remit of the Directive, or is it still a fuzzy area?

Well, if you ask me, I'll say yes, but there are some attenuating factors; it depends how the risk score is used. For example, a lot of audit organizations will assign a risk score, sort the cases so the highest risks are at the top, and say: okay, we'll go and examine the top 10. By doing that, you've automated the decision of who you're going to examine, so assigning a risk score in that situation is clearly in scope. In other situations, it depends how the score is used. If it has a strong influence on an officer making a decision, then I would say yes, because of factors like automation bias. If you see a high risk score from the system, that's likely to sway your judgement, so it counts as making a recommendation and would be in scope. So in most cases, yes, but there may be scenarios where the risk score has no bearing on the decision, and it could be excluded.

And does a regulator or other government body have enforcement powers with respect to non-compliant government departments, that is, departments that should have done an impact assessment but didn't?

Well, for this Directive, we're the Treasury Board Secretariat, and there are several mechanisms we use to assess compliance. For example, we have what's called the Management Accountability Framework.
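Benoit's audit example can be made concrete with a short sketch. In that example, sorting cases by a risk score and examining only the top 10 already amounts to an automated decision. The case IDs, scores and cut-off of 2 below are invented for illustration, and the hypothetical risk model that would produce the scores is left out. The sketch shows only that the cut-off line, not any later human review, decides who is never examined.

```python
from typing import NamedTuple


class Case(NamedTuple):
    case_id: str
    risk_score: float  # output of some (hypothetical) risk model


def split_for_audit(cases: list[Case], n: int = 10) -> tuple[list[str], list[str]]:
    """Rank cases by risk score and split them at the top-n cut-off.

    Returns (selected, not_selected). The cut-off itself is the automated
    decision: no case below position n is ever put in front of an examiner.
    """
    if n < 0:
        raise ValueError("n must be non-negative")
    # Highest risk first; sorted() is stable, so ties keep their input order.
    ranked = sorted(cases, key=lambda c: c.risk_score, reverse=True)
    selected = [c.case_id for c in ranked[:n]]
    not_selected = [c.case_id for c in ranked[n:]]
    return selected, not_selected


cases = [Case("A-101", 0.42), Case("A-102", 0.91), Case("A-103", 0.17), Case("A-104", 0.66)]
selected, not_selected = split_for_audit(cases, n=2)
print("examined:", selected)            # examined: ['A-102', 'A-104']
print("never examined:", not_selected)  # never examined: ['A-101', 'A-103']
```

Changing either the scoring model or `n` changes who is examined, which is why this kind of ranking falls within the scope Benoit describes.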
The framework runs on a yearly basis. Think of it as a survey to departments, where we ask for evidence of compliance with all of our policies. As part of that assessment, we can review and engage with departments. There's also the architecture review. Big IT projects have to come to an architecture review at the Treasury Board, and if we notice an automation component during that engagement, we'll educate and do outreach to make sure that these activities take place.

Great, that's really helpful, thank you. There was a question in the Q&A about whether you might have a link to the Directive. Perhaps that's something you could put in the chat, if that would be possible?

Sure, yeah, I'll do that.

Great. Okay, let's turn over to the other hemisphere and ask for Craig's reflections on New Zealand's experience.

A warm welcome to you all from a bright and early New Zealand. It's quite timely that this conversation is happening today. As I was driving in, Associate Professor Nessa Lynch from Victoria University of Wellington released a report on an international investigation into facial recognition. It found that New Zealand Police now have the capability in place for widespread surveillance of the New Zealand population. The report doesn't identify any evidence that this capability is actually being misused. But the key message coming through is that we just don't know, because there's not a lot of transparency. I think that's a theme you might hear coming through from the panelists today. It has certainly been quite prominent in our discussions as we've developed our Algorithm Charter for Aotearoa New Zealand.

In some ways I feel this conversation has been fairly slow in coming. It's effectively about the ethical use of augmented decision-making techniques by government agencies. I think back to my undergraduate psychology lectures 25 years ago, where ethics was drummed into us. I want to draw on this because I think a lot of this is really about professional practice. In some professions this sort of thinking is quite widespread and has been for a very long time. In psychology, of course, that's because we used to shock people in the 1950s and so forth, or train them to be prison guards and beat up on prisoners. So some of us have been on this journey for quite a long time. I guess it's been accelerated in government by the advancement of the technologies we now have available, with regulation, in some sense, being slow to catch up. So it's a good time to have the discussion.

For our part, we've developed an Algorithm Charter for New Zealand, which is effectively an opt-in model. Agencies can sign up to the charter, which binds them to certain practices in their use of algorithmic techniques. It came out of a report we conducted in 2018. For context, our agency is the national statistical organization, but our Cabinet has also nominated it as the chief data steward for the government data system. Effectively, that means working across government agencies to lift the quality of how we collect, manage and use personal information. So it's a sort of leadership role across government, to make sure we're behaving in a way that's consistent with the expectations of New Zealanders, among other things. That work was born out of concerns about a couple of uses of augmented decision-making techniques, partly in our immigration system and elsewhere. As part of it, we looked into how government is using these kinds of techniques.

My conclusion from that 2018 work was that algorithms are actually not used as widely as I would hope. To be honest, I was a little underwhelmed by the extent to which government agencies were using algorithms to help in decision-making. But what was really clear was that agencies were inconsistent in how they shared information about what they were doing. There wasn't a great deal of transparency about what was being done, how data was being used, or what kinds of data went into the algorithms. That caused some concern for me, and certainly for our government. It was really the genesis of the subsequent work to develop a charter that agencies could sign up to. As I said, the charter binds them to certain behaviours, to make sure there's transparency and accountability for how they're using algorithms.

We released the charter in May or June of this year. Following the release of that initial algorithm report, we went through a public consultation and received quite a lot of feedback. Developing the charter was not an insignificant piece of work, and it was quite challenging to get across the line. We now have 26 agencies signed up to it. That's more than half of our public service departments, including all the big ones that are big users of data, so we're pretty pleased with who's signed up to date. That includes New Zealand Police, I should add, which is really good. In some senses it's a little too early to know how effective this has been. We only released it about five months ago, and, it has to be said, agencies have been relatively preoccupied with other things during the course of this year. So we're not quite sure yet how effective it's been.

As for the elements of the charter, front and centre is the requirement to be transparent about what agencies are doing. We expect agencies that have signed up to the charter to release information on their websites about which algorithms are being used. Second is the principle of partnership, which is particularly important in our context, especially with our Treaty of Waitangi partners. For those who don't know, some time after Europeans arrived in New Zealand, we signed a treaty partnership with our indigenous population, called the Treaty of Waitangi, in 1840. A lot of our activity goes back to how we honour the commitments given under that treaty relationship. That's particularly important here, because the decisions made using these sorts of techniques often disproportionately affect Māori in New Zealand. Māori are overrepresented in justice and welfare statistics and are on the wrong side of health, education and a number of other indicators. So partnership with our treaty partners is critical to understanding how these issues are being considered in the use of these techniques. Third, algorithms are ultimately about people, not about numbers and statistics. People are ultimately affected by the use of these techniques, so we need to be cognizant of that, and signatories need to reflect on how they are thinking about the impact on people. Fourth, data must be fit for purpose. That means thinking about data quality, to make sure the data going into algorithms does not entrench biases that might exist in the system and actually leads to fair and transparent decisions.
Fifth is the importance of privacy, human rights and ethics. And sixth, critically, there must be human oversight of these techniques. As I said, it's ultimately about people. Poor practice is when algorithms are developed, set and never revisited. As context and time change, the original intent can erode if the algorithm isn't carefully maintained. So that's the intent of the charter.

As I said, we released it in the middle of the year, and it received quite a lot of positive reviews, particularly internationally. There was somewhat more criticism within New Zealand. One of the key pieces of feedback concerned the extent to which the charter reflects the interests of our treaty partners. That may not make a lot of sense on the surface. What you need to understand is that there are very different world views and systems of knowledge at play. On one side are Western European views of knowledge, heavily based in empirical research and so forth. On the other is what we call mātauranga Māori, which is indigenous knowledge. So it's really important that we as government agencies find ways of blending these forms of knowledge, and one criticism that came through is that the charter doesn't accurately reflect some of those differences. Various other criticisms have been raised, but I think it's probably too early to judge whether they are actually valid. We need to allow time for the charter to bed in and see what changes it makes to the behaviour of government agencies. In the interest of time, I might stop there and come back for questions later.

That's really fascinating, Craig, thank you so much. It's really wonderful to hear about the unique circumstances in which New Zealand is thinking about these issues. Given that Canada is also on the call, it might be interesting to learn at some stage how you're also trying to bridge those questions with indigenous cultures. Craig, I'm wondering if there's anything you can speak to about red lines around algorithmic systems. Has there been any conversation about them in New Zealand? You mentioned that the police, for example, had signed up to the charter. At this stage, do you see algorithmic systems being used across the justice system, in policing for example, as much as in other areas? Or is there a sense of red lines, areas where algorithmic systems aren't being deployed in New Zealand, whether because of the charter or outside of it?

No. As I said, I've actually been a little underwhelmed, although I think transparency is one of the key things. One thing I don't know is whether people were as transparent as we might expect about what's actually in use when we went out to understand which algorithms are being used. One of the difficulties we've had is actually defining what an algorithm is. I know that sounds a little technical, but it was one of the challenges in the work we were doing.
You know if you take too broad a view of it every excel spreadsheet, you know is underpinned by algorithms, is that and we're not really talking about that we're talking about. Things that are high risk and potentially, innovative, that might have, disproportionate, impacts on people if they go wrong. We actually chose to go with a wide view you know wide definition, of what does constitute, an algorithm. And, to make sure that agencies, don't get bogged down and bureaucratic, read tapers that kind of document, every single. Regression, equation that they use um. You know we we kind of introduced this risk matrix, to make them you know to think about okay if it does go wrong what's the impact likely to be. And what is the likelihood, that something could go wrong through the use of um of algorithms, and so far that's been quite a useful heuristic, to help agencies, think about. Um, to think about what sorts of things might be subject to the to the charter, but, i think in our original algorithm, assessment, report we only identified. Um, about 35, or so. Um what we called operational, algorithms, so things that are being used.

In The operations, of government. Um. That might fit into categories, like i've just talked to kind of high risk ones, that ranges from things like you know risk assessments. For. Um. You know, in the justice system for people coming up for parole it's pretty widespread, internationally. Um through to you know bus route optimization. Algorithms, for the education, system, um. Uh to provide more efficient. Um, bus routes for you know you know for cost saving or more efficient transport, systems for the schools. So, um, uh there's no real i mean it's, it fairly sort of spread across, agencies. Um, and. My point of view who's a champion for the use of uh data for decision making, i'd like to see a lot more of it um. Uh safely used of course. That's great thank you so much craig. Let's send to albert, um. Who. Is. Both joining us as the executive director of the surveillance, technology, oversight, project but specifically. Um going to draw on his experience, participating. In the new york city automated decision, systems, task force, um which i think took place in, i want to say 2018, but i will tell us more about that so over to you albert. Yes thank you so much for having me and, um. Just to give a bit of uh background, here in the us, uh our regulatory, structure, for. Algorithmic. Decision, making, would be charitably, called a giant mass. We have a lack of clear guidance at the federal level, with residual, uh regulatory, authority, held. By the state and municipal, governments, especially, when it comes to the public sector use, of algorithmic. Decision making, and broader, automated, decision making. Systems, by, municipal, agencies. And so in 2017, originally. The city council. Began to address this issue here in new york responding, to the use of rather opaque. Proprietary, algorithmic, systems, such as compass. A. Score, system, used, for a. Pre-trial, release. Of individuals. Who have been arrested. And some other um. 
You know, scandalous, applications, we saw where, algorithmic, systems were uh, systematically, targeting, communities of color. And, so initially, the effort, sought to create. An open source requirement, for all automated, decision, systems, in, uh new york city that were used by a uh, municipal, agency. That later was transformed. Into the mandate, to create a city-wide, task force, which then was, uh formed and actually began uh. Doing this work, in 2018. And 2019.. Uh so while i was uh part of those conversations. Throughout and attended the meetings i was not a formal member of that task force, but, you know i was quite excited, uh initially, because. This was such a pressing issue it still is we have the opportunity, to create the first comprehensive. Regulatory. Response, to automated, decision systems. Uh anywhere, in the in the united states. And what i saw was that, promise, and that opportunity. Sadly, being systematically. Squandered. Such that at the end of the year and a half, um, you know we really, had not yet achieved consensus. On even the most rudimentary, components, of a regulatory. Uh regime. And so part of this was just the inability, to bridge the gap between civil society. And, public, sector. Entities, in approaching, the regulatory.

Framework. Uh, in particular. Um you know we saw strong pushback, from. Law enforcement, agencies. Uh who are. You know have long, been opposed, to that sort of transparency, when it comes to. Investigatory. Techniques. Such as facial recognition. And i should say you know this is all unfolding, as, these tools are really expanding, exponentially. And in the last three. Years here in new york city we've used facial recognition, more than 22, thousand times. In criminal investigations. Including, everything from graffiti, and shoplifting. To uh really a variety, of petty uh crimes. But we also see ads, becoming. Part of the fabric, of, education, healthcare, delivery, fire, fighting. And really it has become, a large, part. Of, how we deliver, municipal, services, here in new york. And, you know part of the concern, was. You know while, we did have, agreement, that machine learning systems should be subject to a heightened. Regulatory. Structure, of some sort, there wasn't consensus. About the use of some of the lower tech systems. Such as, the excel spreadsheets, that craig mentioned. I actually, am, of a slightly different mind when it comes to those sorts of systems. Because i do think that some of the most powerful. Forms of automation. In municipal, services, because of the slow uptick. Of these, newer cutting edge, machine learning models. Ends up being. The, the uh formula, hidden in an excel spreadsheet, that can, you know disproportionately. Impact over police communities, or underserved. Or deploy, resources. To, a lower income community and so we wanted, uh on the advocacy, side those systems, be part of the framework. We never were able to reach consensus, on that we were never able to reach uh, consensus. In part because. Sadly, the task force wasn't actually, able, to, gain information. About, how. New york city's abs, were operating. So during the course of the task force we repeatedly. Asked for. 
just access to the data on what ADS were being used, how they were being used and what safeguards were already in place; at a bare minimum, to have model cards or some other set of information about the status quo. This is a very hard regulatory space under the best of circumstances, but it's almost impossible to create a meaningful framework when you don't have that underlying access to how these tools are already being deployed, let alone trying to future-proof it for systems that will be deployed in the future. That was another thing that many task force members spoke out against. We actually had a shadow report, which I'll include a link to in the chat later, put out by several task force members and a number of civil society groups. It was created as a response to the official report, highlighting its shortcomings and putting forward the alternative framework that we hoped the city could have adopted. Since the task force disbanded at the end of last year, we had an executive order from Mayor Bill de Blasio creating an algorithms management and policy office, which oversees the use of ADS by city agencies. Sadly, that also appears to have really languished: there's been minimal activity with that office since it was announced, we haven't actually appointed the officer who's supposed to oversee that department, and we really haven't seen any meaningful transparency about existing ADS, let alone the regulations we need to push back. This is why we've seen an increased focus, at least in the US, on using litigation under existing human rights and civil rights laws as a way to get some regulatory footing and push back against some of the more abusive ADS. But we continue to think that we need a comprehensive regulatory structure. We need that at the city and state level, and eventually we hope we'll see something similar at the federal level. Just because of time, I'll leave things there.

That was great, a really concise history. I wondered if I could ask a couple of follow-up questions. Perhaps this is in your report, and please do share it so we can all read it. What lessons would you take from the process by which New York City went about conducting the task force: what do you think could have been done better, and what could other countries and cities do better next time? And then, are there some top three things from the actual content of the work you called for in your shadow report that should be top of mind?

Yes, I think with ADS we see such a democratic accountability gap that we believe any structure we use going forward, any task force or other approach, has to be driven by civil society, by community members, by the public. It should not be driven by agencies themselves, because they have such a conflict of interest in developing these regulatory norms, and I think that creates a huge chasm in what people are prioritizing when developing these new rules of the road for automated decision systems. In terms of the top-level takeaways from the shadow report, I think there's a real premium being put on transparency. One thing that's quite crucial to all this is going beyond algorithms as well, and recognizing that training data and other data sets used by ADS are all part of what can create bias and fuel errors with ADS. So simply having an open source requirement or a third-party auditing requirement isn't enough; we really need to create new mechanisms
to inform the public of how ADS are making decisions about their lives. And then redressability: looking at how we can ensure that anyone who is impacted by an automated decision system has a meaningful opportunity to appeal that decision, and any resulting harm, to a human decision maker, through something much more streamlined and much more accessible than the traditional court system. Simply because if we force people to go all the way to filing litigation in order to vindicate their rights against ADS, it will create such a potent barrier for some of the most vulnerable communities, and really allow dysfunctional ADS to continue.

Yes, that's great, thank you so much. There's a lot in there, and we're getting a lot of questions in the Q&A, so I'm sure we'll come back to the New York experience. To round us off, I'm going to ask Anna, the director of the Institute for the Future of Work, to speak, and in particular to speak about a really excellent report they published recently, which we'll make sure goes in the chat as well. I'll let Anna tell you more about that.

Thanks so much, Carly, and for inviting us to co-host this amazing event. I would like to talk about our work in the Equality Task Force and our recent proposal for an Accountability for Algorithms Act. About a year ago we established a cross-disciplinary task force of technologists, academics, lawyers, business and unions. We resolved to zoom in on how automated technologies and AI were used at work in some really key areas, to look at the opportunities and challenges arising from their use there, and at how to resolve them. So we very much come from a work perspective; I should have said that we are a charity with a mission to shape a future of better work. As our methodology, we designed three quite detailed case studies covering three high-stakes areas in which we've seen acceleration: hiring, management and performance. The case studies were hypothetical, but we used real research and other material in the public domain, as well as the expertise of the group, to make sure that they really reflected real uses and real experiences.
And also to make sure we could get past some of those transparency and access problems that you've all talked about. Against that background, we pulled out the challenges and the themes, tested the main legal frameworks for accountability in the UK, and identified goals and the steps we thought needed to be taken to get there, and that did take us to the need for fresh legislation. As I've said, we focus on work and a very sharp experience of work, but I think what we have found in our propositions and themes may shed some light on similar problems outside work too. If I can first share some of the main propositions and themes that underpin the proposal, I think you'll spot similarities with all of the experiences you've spoken to. The first proposition is that although human roles are often obscured, data-driven technologies are very much the product of human decisions. When you go into the weeds of our case studies, you see really clearly that it's humans who are setting the agenda, setting the parameters, defining the problem, selecting the training data set and the variables, and fitting that technical system into the wider decision-making system. Those decisions are the ones that really count, albeit that they are diffuse, often hidden behind a language of technology or statistics, and spread out across multiple roles and organizations. Even with machine learning, it's an aggregation of algorithms to achieve an outcome set by an initial algorithm designed very much by humans in this way.

And that's something we feel is sometimes lost in some of the wider debates, and part of our task is to recognize this and to reaffirm human agency. The second proposition we came to through looking at the case studies really closely, which is really more a debunking of a myth than a proposition, is that algorithmic systems and the data that feed them are not neutral. The systems we've looked at are trained on data sets that capture patterns and relationships reflecting past access to resources and past choices: for example, in a work context, access to work, pay and shifts. Again, I think you can see that really clearly in a work context. So by their nature, they will encode past patterns of inequality, privilege or disadvantage, and without intervention they will learn from them, and those patterns will be replicated, compounded and projected into the future. That is a big problem given the huge scale and pace at which these data-driven systems can work. And given that they stretch across traditional boundaries between work and home, and that the dimensions of data are increasing too, this is an extra big problem. It's also a problem because, in a sense, the systems are meant to discriminate: they're designed to find patterns and similarities between people and groups of people, and for any former employment lawyer, at least, that's a big red flag. Another point here, before the final proposition: the way the systems work and the way they are used means that we're moving from an assessment of what a worker, a person, can actually do, to a prediction of who a worker is and what she or he is likely to do. The third proposition is information asymmetry. This is already really pronounced at work, but we've seen clearly through the cases, again, that the use of data-driven systems exacerbates
this, as the systems simultaneously seek more information and give less information about what they're actually doing. I won't go through our polling and information, but it's in the report; perhaps we can put it in the chat and you can have a look at that. So against that big background, we looked at the law really closely. Our full analysis is in our report and I won't trouble you with it, but do have a quick look. The findings, in terms of the way the law works, are in summary these. Individual rights and remedies are not sufficient: what we've all really been talking about is structural, intersectional inequalities, which can result in collective harms on a mass scale that can be projected into the future as they are amplified. So our case studies and our legal analysis showed that relying on ex post facto individual claims isn't enough; it's often not possible even to detect the harm, and by then it's too late. Therefore a legal framework founded really on individual rights won't ensure that these problems are identified and tackled and that the systems are well designed. I'm going to skip the next problem because I can see the time, and move on to the fact that there are multiple forms of unfair discrimination that are not all caught by protected characteristics. I know legislation varies across the world, but some of these factors are benign, like what your favorite color is, while some will be about your postcode and your voice. Therefore being wedded to particular axes of discrimination is not always the best approach to identifying and solving the issues that we're seeing. So we've seen that the current law has limits, and we've identified significant disconnects and contradictions
both within and between the legal regimes. What we're really talking about is a kind of intersection, in the UK at least, between an equality and employment regime and a data protection regime. It sits between them, rather than being wedded to or dealt with by either. We've seen problems of enforcement; we have those in the UK, and plainly you will experience them too. There are also problems with powers and expertise. You need, as Benoit said, cross-disciplinary expertise, and you need powers, including powers to investigate and to set standards.

So against all that background, we've come up with a proposal, which is only an outline and needs a lot more work and development, and we would love to know what you all think about it. It is a principles-based, overarching framework which straddles the innovation cycle and sectors, although of course we know that sectors will need specific guidance in addition. It combines forms of self-regulation and new duties. Outstanding among the new duties are new corporate duties, and in particular an algorithmic impact assessment. Within that we flag equality, because that's the area we've found to be particularly neglected and absolutely important in this scenario. So the duty is a systematic and dynamic one: at the earliest possible stage of the innovation cycle, to assess this, evaluate it, and then make reasonable adjustments for it. We know that needs more work in terms of identifying its precise remit and thresholds, and I'm sure there are some lessons we could learn from you. But as a basis, we think that's a good place for us to start, and a good place to start a wider conversation, as Albert has said, with civil society and other stakeholders involved. We also make other recommendations, in particular on transparency, powers for the regulators, and the cross-regulatory forum which we've proposed. But I think, Carly, I'd better stop there.

That's excellent, Anna, thank you so much. I might just pick up on one thing you said towards the end and turn it back to Benoit, if that's okay. You talked about a corporate duty,
imposing this duty at the corporate level. Benoit, a question that sprang up for me when you were speaking is this: the obligation in the Canadian instance is around public sector bodies procuring technology. We had a number of questions in the chat about this idea of opening up a black box and the explainability of systems, and to what extent that conflicts with corporate claims around intellectual property and trade secrets. I'm not sure to what extent the Institute for the Future of Work went into this as a challenge to the idea of a corporate duty, but I wondered, Benoit, if that was something you had faced in your work. When public sector bodies are procuring systems from private sector companies, what is the relationship between the two in fulfilling the algorithmic impact assessment, and what obligation is there on government to really get under the hood of the system they're procuring?

Sure. First of all, under the directive, if the system is built in-house, which is the case for a lot of systems in the Canadian government, the source code is to be released. But as a transparency measure, I'm not sure it's really effective for the average citizen. What good is a pile of source code? You're not equipped to understand it. So I prefer other types of transparency, where it's in plain English: what is the system, what data is it using, how is it using it. I think those types of discussions are more useful than the source code. That said, for the link with the private sector, there are a lot of cases where you may procure systems to help you. In that case, we require access to the source code, so that's something you need to negotiate,
to be able to have access to the system and all its versions, because somebody could come six months down the road and want to contest a decision. So as part of the contract, we require access to all the components of the system, basically since it was first deployed, so that you can re-verify a certain decision, recreate the conditions, and truly understand it. I'm not sure if that explains it or answers it.

Yes, that's really helpful, thank you. We've got a scant nine minutes remaining and a lot of questions in the chat. There are a couple of things I wanted to pick up on. One, Craig, and I want to connect this to Albert's experience, is how you build public trust in the use of automated decision-making systems. You hinted at some of the critique the New Zealand government had received on the charter, in particular about engagement with Māori communities, and I wondered if that has impacted your thinking going forward about how to better build trust with those communities. And Albert, I also wanted to ask what you think it would take, in the US context or the New York context, to rebuild that trust, which feels like it might have been lost through the process. What would it take to start to build more trust in public sector use of these systems or technologies? So Craig, and then Albert.

Look, it's a really good question. I should start by saying that I don't think we've lost public trust; I think it's a risk rather than an issue. Ultimately, I think transparency is a key part of it, and it can help or hinder trust depending on what we're doing. But for us, particularly with that question of Māori, it's about how you enable greater participation for Māori, and I think this could apply to all communities, really: how do you enable much greater participation in decision-making about how these things are being used? In our context, we're engaged in another process with Māori, a data governance conversation, because Māori have largely been shut out of the government data system, with decisions being made about
and for Māori without any real involvement of Māori communities in those decisions. I think that's a major problem for us; it does erode trust, and we're trying to find a way that enables much greater participation in decision-making about how government is collecting, managing and using information that's relevant for Māori to make good decisions to further their own development. Algorithms fit into that; they're part of that ecosystem, if you like, but it's a wider discussion that we're having. I know Canada has had very similar discussions as well; it's a pertinent discussion for a number of countries, including Australia and others. And to me, that's how trust is maintained: by enabling that. It's not just advice, or one-way transmission of information from government to communities; it's genuine decision-making, which is really challenging. That's actually really hard in my world. I'm a deputy chief executive in the national statistics organization, and there's legislation that protects the independence of the Government Statistician. It's there for a reason: to make sure the Government Statistician can say things that might be politically unpopular. That independence is there and operates in the legislation, so how do you enable co-governance and decision-making with community members about how these things are done? It can be quite challenging. It's easy to say and hard to do, but ultimately that's how we're thinking about building and maintaining trust and confidence, particularly with our Treaty partners.

I think it's a very current conversation, in particular in the data governance space, isn't it: how do you build participatory mechanisms for data governance? I'd love to follow more of what's happening in New Zealand. In the context of work as well, Anna, there is this live conversation about how you involve workers in a conversation about how their data is used, including by their employers, and make that a more participatory process. Albert, did you want to come in on that question as well?

Yes, because I think this is a crucial question, but it's a second-order question. First we have to ask: should the public trust the ADS being used by government? I think, resoundingly and unfortunately, the answer in the US is no, because we're using criminal justice algorithms that have been demonstrated to discriminate against communities of color, against women, against non-binary individuals. We're continuing to use algorithms that are hidden by trade secret protections. One thing I want to flag at the national level is that we have legislation called the Justice in Forensic Algorithms Act, which would require any vendor whose technology is used in the criminal justice process to waive trade secret protection as part of that criminal proceeding. From our perspective, that sort of transparency is a bare minimum.
But as another example, there's currently a bill pending before the city council here in New York that would address algorithmic discrimination in hiring and employment, and on that measure we've been doing quite a bit of activism, trying to ensure that we're including workers in those conversations and giving organized labor an opportunity to audit these algorithms as well. But also, because of the nature of the American legal system, we're really focusing on the ability of the private bar to bring litigation on behalf of those whose rights have been violated, because we just don't trust that a public-sector-focused regulatory approach, which depends on administrative agencies allocating the budget necessary to actually police these algorithms, is going to be sufficient. We're at a point where a lot of these agencies are struggling to keep up with how these algorithms operate, let alone create an actual enforcement regime. So we think that, given the limitations, especially in municipal enforcement, we're going to have to use more private litigation, civil rights litigation, just as we have in fighting human discrimination.

Yes, even though that comes with the limitations which you yourself pointed out, and Anna did as well, around access to justice and the problem with ex post remedies. I think your point is a really good one, and it would be a great one to close on: you're entirely right to say that it's not about building public trust but about building the trustworthiness of these systems, and making the case for why they should be trusted. That, it seems to me, is really the ambition of some of these frameworks, such as the algorithmic impact assessment: ensuring that the system is trustworthy to begin with. I'm afraid we'll have to end it there. There are a lot of questions and conversation still happening in the chat. Hannah, if you don't mind downloading the chat before we close so we can follow up on anything that might be remaining in there, that would be great.
Thank you all so much to our panelists; we really appreciate your time. And thank you for engaging with us. We've learned a lot, and I hope the audience has too. This will be available as a recording, hopefully by tomorrow, and you can find it via our Twitter account and on our YouTube page. Have a great morning, evening or afternoon, all of you, and thanks again for your time. See you later.

2020-12-07 04:22