Generative AI: Finance and Economics Spotlight Session

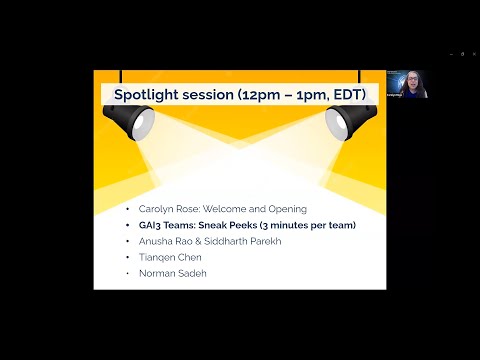

Okay, welcome everyone to our Spotlight session on finance and economics, the third of three hackathons for the Generative AI Innovation Incubator. We have an exciting lineup for you today. First of all, you will probably want to check out our team websites. You can find them on GitHub, and as soon as I am not sharing my screen I will copy the links into the chat so that you can get to them.

I'm Carolyn Rosé, and I'm doing the opening and welcome. We will have a sneak peek from each of our hackathon teams, three minutes per team, and then we will have three Spotlight talks: a 10-minute talk from one of our GAI3 interns, Anusha Rao, and an undergraduate intern, Siddharth Parikh, who are going to be talking about some of the great work they've been doing recently in the area of finance and large language models, and then two faculty Spotlight talks, one from Tianqi Chen and one from Norman Sadeh. We're very excited to have them here, and I think they will offer very inspiring and mature views of AI from all of the work that they have been doing.

With that, we'll very soon turn it over to our teams, but I want to make a couple of announcements. One of them is that tomorrow will be the judging event for our teams. Today you get to see a sneak peek, but tomorrow you can actually weigh in on the judging of the final projects. The teams will be working very hard for the rest of the day and tomorrow morning, and then they'll be presenting their progress and what they've learned. In some ways I think the lessons learned are the most valuable contribution to the community as part of our ongoing discussions in GAI3.
Then there will be a deliberation of judges: some expert judges will vote alongside the average of the whole community vote, which counts as one person, and then a winning team will be selected and announced. Next Friday will be the closing event of our summer series. We will have a closing keynote by Jaime Teevan from Microsoft, then the presentations from each of the three winning teams, one from each hackathon, and then our closing keynote will be from Mona Diab, who's the new LTI department head. I started out this summer series as the interim department head of LTI, and now I'm very happy to have stepped down from that and welcomed in our new department head, Mona Diab. But GAI3 will continue: we plan to have a monthly online talk series and other kinds of things. There will be a small break in the beginning of fall, but then we'll be kicking off again. I'll finish off the session with a few more announcements, but for now I think we will start with our team presentations. The first team presenting is ChatBI. ChatBI, can you go ahead and share your screen? Okay, you have three minutes, and I'm starting the timer now.

Hello everybody, I'm presenting for ChatBI. We believe outcomes are a combination of insights and automations. This is our team: we are a team of four with a background in data science and engineering. The problem we are trying to solve is that businesses don't want more data, they want outcomes. Business intelligence tools today enable reporting, which is a great first step, but what we are really looking to enable with generative AI is the few insights and action items that the business needs to focus on today, this month, or this quarter to truly move the business forward. So we want to enable Q&A capabilities, we want to bring together data, and we want to empower users with the ability to take actions and automate workflows. About the
solution: we are a vertically integrated insights and automation product, and we are focused on small and medium-sized businesses to begin with. Why this customer focus? We care about technology, but we care more about our customers. Small and medium-sized businesses are often ignored when it comes to getting the latest tech, so we want to change that. We also believe that by empowering SMBs we can empower the whole economy, because they have a very strong effect there. And why generative AI? We believe that businesses at different scales need different kinds of insights and automations. Generic SQL-based dashboards just don't work for everybody, and AI has the ability to bring in a human when needed but otherwise automate workflows. We think we can take this close to the place where gen AI is the brain of the business.

This is a user persona. This is Tom Jeps, who runs a business with his wife Janice. They own a general contractor business and have 12 subcontractors. They have slightly different usage patterns: Janice handles the business side of it, and Tom is in the field. Our proposition is that insights need to be as simple as this: jobs, number of contractors that are busy, ability to increase revenue, and so on. These are some examples of our insights. "You have three jobs" is an operational insight; we can have a revenue insight, a cash flow insight, and opportunities, and we can add more. But the whole point is to give them exactly what they need to see, give them a way to act on it, and move forward.

Oh, and the proof points: Shopify recently launched Sidekick, which is an AI assistant for e-commerce business owners, and Microsoft Power BI now has insights baked into their platform in beta. What's unique about us? We are tech-enabled, but we are focused on verticals and customers, and we believe that by fine-tuning our model and keeping the UX really simple
we can meet our user needs. Thank you very much.

That was great. Okay, and now we will have our second presentation. I will call up Sustainable Investor AI.

Thank you, Carolyn. Hi folks, my name is Anshul Madan. We are team Sustainable Investor AI, and these are my teammates. We are building an AI advisor for investors with a focus on environmental, social, and governance (ESG) investments, combining analysis across traditional financial factors, such as revenue growth and profitability, and sustainability metrics, for example carbon footprint and social impact. 86 percent of millennials indicate they consider ESG an important investment criterion, and investors recognize the impact of environmental and social risks on financial performance, as can be seen from the quantity of global assets managed according to sustainability investment strategies. Investors value sustainability information but complain about data quality; about greenwashing, which is the use of misleading labels and advertising to create just an illusion of environmental responsibility; and about consistency and reliability. Financial research is time-consuming and labor-intensive, as the volume and complexity of data present significant challenges for manual analysis.

Our solution is to build a reliable investment advisor for ESG investments by providing financial advice through a natural language interface along with a measurement of trustworthiness. By making it easy to analyze reports and detect greenwashing, we are helping users make informed investment decisions. Our end goal is to leverage AI to summarize entire financial research, including 10-Ks, but our focus for the hackathon is sustainability reports. Come back tomorrow for the final presentation, where my colleague Ijon is going to talk about how we use standards to detect greenwashing.

Here are some results so far. It is important to choose the LLM per the use case. We chose Claude 2 because of its 100K
token limit and its support for the large sustainability reports we are analyzing, thus obviating the need for external long-term memory such as vector databases. We also realized the need for prompt guidelines to accurately quantify the trustability of data sources. Referring to the standards, we are developing detailed questions and sub-questions to get clarity on the requirements and quantify trustability. And for reliable analysis of the reports, we do not simply pass the user questions to the prompt: we need to develop three prompts that specify how the responses must adhere to the standards as per the requirements, for example, only use metrics defined in the standards and not companies' self-defined metrics. We also have some other planned results, like measuring effectiveness using prompt guidelines and different prompting strategies, for example few-shot prompting. I look forward to seeing you at our session tomorrow. Thank you.

Hey, thanks so much. Okay, and now our next team is TrustSense. Okay, go ahead and get started.

Hello everyone. Our team is TrustSense. My name is Kaushik, and we have Sai Pranava, Jahan, Ian, James, everyone from different disciplines. To give you a little background and a recap: cybercrime has become a very big problem, and it's getting a lot worse. A cyber attack occurs roughly once every 39 seconds, and more than 800,000 people fall victim to these cyber attacks each year. To give you an example of how generative AI can aid these attackers in crafting convincing phishing emails to scam someone, or creating fraudulent emails related to finance, here is what happened when we asked it to generate such an email. It will also give the different techniques that these people use to create malicious content.

This is an overview of our solution, what we are trying to build for this hackathon. Our main novelty here is the explanation module that we are using in our
architecture, which explains the decision taken by the trained model: it will tell why the model made a given decision, whether the content is fake or real. We are also generating a report that will help users decide to what extent they can trust the decision made by the model. We are focusing on identifying the trust and sincerity level of information that users encounter online. We analyze message content, tone, style, and other data, detect potential vectors of attack, and help users make informed decisions.

So far, we were able to create synthetic data using ChatGPT, and we also found some datasets online that are relevant to what we are working on, related to fraudulent and phishing emails, as well as Twitter bot data. We also began testing LLM-based models. Right now we are playing with LLaMA 1 in a zero-shot way, just using prompts and seeing if it is able to detect these on its own. We would also like to fine-tune the model, and we will be building the Google Chrome extension that we talked about, which will make the tool more accessible to end users. Thank you.

Okay, and our next team is Taxman.

Hey guys, one second, let me share the screen. Hey guys, we are team Taxman. We are Abdullah, me, and Hanif, based across Pittsburgh and across all major disciplines, and we are working on the issue of taxes, something everyone knows about but where most of the time people are unsure what happens and how it works out. In the first place, we're trying to understand whether it's a pertinent problem. The advent of AI, or generative AI, in the field of taxes has fueled a lot of faster and better tax experiences for taxpayers, and we want to fit into one of those aspects: how you can get help from generative AI in trying to understand the lexicon around taxes and, in general, how to fill out forms
that are related to specific taxes. Our solution is an easy-to-use platform for filing taxes and filling out forms. Specifically, for the duration of the hackathon we are focusing on Form 1040. The tool will help you understand tax lexicon and terminology, including some of the really weird terms that most people don't understand, for example "unattributed income from other sources"; I could never understand what it is. The genesis of the idea came from me last semester, in spring, really struggling to understand how these things work and wishing I had some help, because I ended up Googling almost everything on the form itself. So our tool will help you understand some of the terms that are pretty common in tax forms but that you might not know, and it will take an input, for example your Form W-2, and fill in the tax details for you.

How are we trying to do this? It's based on three main components. One is LLaMA, a fine-tuned large language model, which will help you navigate through Form W-2. The other is OCR, which stands for optical character recognition; it's just a way in which a computer reads text and generates information from it. All of it is combined in an easy-to-use UI. At any time while filling in the form, if you are unsure about anything (what does "capital gains" mean? how do I use that?), the tool will help you understand it and then point you toward the place where you might be able to get that information, and what the next steps would be.

After the hackathon: as we mentioned, we process Form W-2 into the 1040. The 1040 in itself involves more than 50 forms and schedules, all of which are listed in the complete tax code. Our next step would be to incorporate all of those forms, so that it reaches a point where you can just upload documents onto the platform and it fills everything out for you. Another step would be tuning the OCR model so that it
can identify information for filling out the form correctly. And then, understand that this is just being done for one category, individual income tax forms, which is one subdivision; there exist 10 more categories that fall under individual, and similarly there exists a completely different category for business, which is corporate business tax. So the next logical section...

I'm going to have to cut you off now, but thank you so much. Okay, and the final team is team ITA. Are you ready with your presentation?

Yeah, sure.

Okay, go ahead and start.

Sounds good. Hi everyone, we are team Project ITA. Let's go ahead. This is who we are. I'm currently a software engineer at JPMorgan; I worked at [unclear] before and graduated from CMU in 2016. We also have [unclear], who is currently a risk manager at [unclear] and graduated from Duke, and we have DJ, who is currently an engineering manager at PayPal and got a PhD from Ohio State University.

What we are working on is leveraging AI, mostly generative AI, to automate data pipelines, what we call the ETL process. Data engineering takes up a substantial part of companies' whole budgets, so we want to reduce this as much as possible by automating the data onboarding, ingestion, and migration process. We are also going to leverage AI for testing, validation, monitoring, and observing the data to ensure the safety of the pipelines.

The core idea in this hackathon is that we want to automate the process with Snowflake. A user inputs something like "Hi ITA, can you pull the transaction data and show the top 10 merchants?" or "Can you run this query on Snowflake?" or "Can you give me some database or table examples?" LangChain converts this prompt into instructions, executes the task by calling the external Snowflake API, and then returns the result to the user. So
this is the typical flow. We have a front end and a back end, and we're also working on fine-tuning the model served on the back end with LangChain, to give a better user experience around things like model hallucinations. I want to give a quick demo of what we have now. I put up this basic UI, and then I can say, "Hey, connect to Snowflake," and the agent will try to connect to Snowflake. It says Snowflake is connected. I can ask, "What databases do I have in Snowflake?" It can look up the information we need with this prompt and find four or five demo databases. I can also ask what schemas...

Okay, I'm going to have to cut you off now, but thank you so much, and it's very impressive that you have a demo already. Thank you. So those were our teams, and now we will have a short Spotlight talk from interns Anusha Rao and Siddharth Parikh. Just go right ahead.

Is my screen visible?

Yes, please go ahead.

Hi everyone, I'm Anusha Rao, a graduate student at CMU. I am currently pursuing my master's in computational data science, and my teammate is Siddharth Parikh, an undergraduate student at CMU. Together we present our work on FinQA, which tests the numerical reasoning capabilities of large language models. As we all know, there has been considerable excitement within the community regarding...

Anusha, your slide didn't... oh, there. Sorry, there's just a lag. Please go ahead.

Okay. There's been considerable excitement in the community regarding large language models and their potential achievements. ChatGPT has now become a new standard, and the release of GPT-4 has marked the pinnacle of innovation in natural language processing. However, we should ask ourselves two important questions. The first is: do open-source models like LLaMA have results comparable to those of OpenAI models? The second is: is few-shot prompting a
viable solution for enhancing the capabilities of large language models? Let's answer these questions through our experiments exploring numerical reasoning capabilities in LLMs.

As artificial intelligence continues to advance, there's definitely a need to establish more robust benchmarks, and one such benchmark is FinQA, which is designed particularly for finance. A typical FinQA example looks as follows. It consists of a corpus that has both textual and tabular information, a question that needs to be fed into the model, and an expected quantitative answer that can be derived using a series of operations. We tried out zero-shot chain-of-thought prompting on GPT-3.5. While the model successfully extracted the details from the text, it struggled to understand the underlying language; in this particular case it did not understand the meaning of "weighted average," so the operations it carried out did not lead to a correct answer. In addition, the model failed to capture the details outlined in the table because of the extensive length of the text.

So what exactly was the problem here? Why is financial data harder than normal text? Unlike regular textual data, financial data mostly comprises numbers, and it involves computation over tabular content. A recent study revealed that current word embeddings treat numbers as ordinary words, which results in models capturing a rough sense of magnitude but failing to grasp exact numerical significance. Therefore we need to design an intricate architecture that helps the model understand the context better.

Let's now look at our architecture. The FinQA dataset comprises approximately 8,000 question-answer pairs. We feed the corpus along with the question into the retriever model. This consists of the MPNet base model, which I'll talk about in detail later. We then use cosine similarity to extract the top-n most important sentences, in our case the top five, which are fed into the generator as input. The generator is a CodeT5+ model, which we'll also talk about in detail. It generates a program in a domain-specific language to solve the problem, and this is then evaluated to produce a mathematical output as the answer.

Let's delve deeper into the retriever architecture. In my initial experiments I started with LLaMA 1: I began by fine-tuning the 7 billion model and then went up to the 30 billion model. However, this pipeline did not really give me the expected output; it did not improve on the results of the baseline FinQA model. So I searched for a model which was
particularly designed for retrieval, and I found MPNet base to be the most appropriate one. What is unique about this model is that it addresses the problems of both masked language modeling and permuted language modeling. It introduces dependency among predicted tokens, thus solving MLM's problem in BERT; on the other hand, it also overcomes position discrepancy by incorporating auxiliary position information, which solves PLM's problem in XLNet. Here are the fine-tuned results that we obtained from our retriever. Comparing them to the gold index results, we see that two out of the three sentences that we retrieved are important for our generation. We obtained an accuracy of 89.73 percent when retrieving the top three results and 93.5 percent when retrieving the top five results.

Moving on to the generator. Once we receive the outputs from the retriever, we encode them using a pre-trained encoder, in our case CodeT5+, which was quite recently released, along with the embeddings for the special tokens from the domain-specific language, that is, the operations, the parentheses, and so on. We then pass these on to a custom LSTM decoder, which at each time step generates a part of the program and updates the memory to include any evaluation of the previous step, until a complete program is generated. Has the slide changed for everyone? Okay.

With respect to the results, we have two metrics that we evaluate on: program accuracy and execution accuracy. Execution accuracy is more lenient and allows for different programs that reach the same answer, since chances are they are some alternative form of the target program, whereas program accuracy checks whether the generated program is identical to the target program. Having created two models, one on the results we obtained from the retriever and another on the gold retrievals from the FinQA dataset, we see that in both cases we beat general crowd
performance by a significant margin, but at the same time we're also quite far from expert performance, which is likely because a lot of knowledge is gained from being in the field for years. Clearly we need further research into this. An alternative approach that we are currently testing is, instead of using an LSTM decoder, to use CodeT5+ as a complete text-to-text generator, which would hopefully take advantage of the model's code generation and understanding capabilities, since it's trained on a corpus consisting largely of code.

Now let's compare our results with GPT-3.5. We chose a sample of 25 sentences that were representative enough of the corpus, and we found that GPT-3.5 without chain-of-thought prompting gives us approximately 48 percent accuracy. With chain-of-thought prompting, that is, asking the model to generate a step-by-step solution upon giving a wrong answer, the model achieves 56 percent accuracy, which is definitely an improvement. Finally, the FinQA model that we designed achieved 72 percent accuracy, beating both GPT-3.5 setups. This signifies that there is definitely a need for a more complex architecture that has a better understanding of the domain than prompting alone provides.

We made a couple of observations throughout our experiments, and here are a few of them. The first was that most of the successful operations are related to average, net change, or growth rate. The second was that changing the word from "portion" to "percentage" does give us improvements. This shows that the model is aware of certain popularly used words but fails when we use a synonym that is not so commonly used. Another strange yet interesting observation was that the word "cash" seems to have confused the model. This might be because financial texts don't really depend on the mode of payment; the word does not change the answer, but it seems to have affected the model in some way. And finally, despite all our efforts, domain-specific terms like debt covenants or capital expenditure are still hard for the model to learn. These are terms that financial professionals learn over time and that are rarely explained in financial corpora, which is why our model does not perform well on them.

This leaves us some room for improvement in our future work. While our experiments with LLaMA 1 didn't turn out as expected, we feel that Llama 2 is much more powerful, because we've tried zero-shot and few-shot prompting techniques with it and it was more promising than LLaMA 1, so we want to try it out. In addition to that,
a paper by Armineh, under Professor Rosé, has introduced a novel addition to the generator architecture that adjusts the attention weights of the model based on the alignment of the input with the output. We believe that combining these two architectures will help us attain a significant improvement, so that is what we'll be trying out as our future steps. That's it from our side. Thank you.

Thank you so much, that was very inspiring. And now we will have our first faculty Spotlight talk, by Tianqi Chen.

Okay, can everybody see the slides?

Yes, yes, go right ahead.

Thank you for inviting me. Hello everyone. Today I'm going to tell you a bit about the work we're doing to apply machine learning compilation to bring open language models onto consumer devices. To give some background, one of the things we're looking at is how the software landscape is now changing toward AI. In the case of AI, we need to bring together larger datasets, bigger models, and more compute, and there's a question of how we develop a software ecosystem around this new paradigm. One of the key challenges here is how we do ML system optimization. While we can go and directly call APIs, there are a lot of questions about how you really run those machine learning models in each environment, including things like data centers, CPUs, GPUs, and special accelerators. Traditionally, engineers go and build libraries for each of the different hardware backends, and it's really hard to generalize and support the broader spectrum of devices that's out there.

So one of the things our group has been working on is an area called machine learning compilation. The goal is to take machine learning models and automatically generate code for the hardware of interest. In this case the hardware could be your phone, your browser, or your laptop.
Sometimes some of them also run on data center devices. Specifically, one technology we have been developing recently is called TVM Unity. This is an open-source machine learning compiler that allows you to bring high-level machine learning models onto any of the environments you're interested in. TVM Unity is a Python-focused tool that allows you to build a set of ML programs in a centralized abstraction called IRModule, where each of the components can be visualized in Python and corresponds to a part of the model. For example, in this case, a Stable Diffusion model, you have a VAE decoder that corresponds to one of the executions. One of the key things in these end-to-end model executions is that nowadays it's critical to support what we call dynamic symbolic shapes, so that you can support things like variable-length language inputs and different input sizes.

With IRModule as the central concept, what TVM offers is a unified API that allows you to productively import models from existing machine learning frameworks like PyTorch and to inspect and interact with the corresponding programs. You can go and transform them to get more performance and make them run on more devices, and then you can deploy them onto the environment of choice. One of the key features that we have been pushing for is what we call universal deployment. That means you write your code, bring it into the compilation flow, and we build a runnable function that you can take and deploy onto the environment of interest. That could mean Python, say a PyTorch environment, or C++, or in certain cases we can also deploy directly to the browser in JavaScript through a runtime that we support.

So this is the high-level background. What I'm going to tell you a bit about is what we can do with this kind of technology. The
specific thing we're looking at is how we can bring new foundation models onto consumer devices. There's a blog post that you can go and check out. There are a lot of motivations for deploying large language models not only with service providers but also on local devices like your laptop or phone. For one, you can certainly bring more personalization, with models that interact with personal data. Sometimes you are on a flight, or you are off the grid, and you still want to have your personal assistant with you. If you're developing games, maybe you want specialized models that run in the game without querying the internet all the time. And finally, there's an interesting future question: if we can have decentralized devices working with each other, what would that look like versus the centralized vision?

In order to do that, we need to be able to deploy these models onto a large number of consumer devices, and you can see the images here: that includes your laptop, game consoles like the Steam Deck and Switch, mobile devices like iPhone or Android devices, and so on. The challenge is that there are so many ways to program these devices. I'm listing possible GPU runtimes here, and if you count, you can find at least nine that correspond to all those environments. It's also very hard to keep up with machine learning model and system development. So what we do is bring in this ML compilation technology, take language models and Stable Diffusion models, and apply some of the optimizations we talked about at the beginning, which allows us to bring those models onto the environment of interest.

So these are the high-level technologies, and if you're interested you can see it in action. If you go to
this link here, mlc.ai/mlc-llm, you will be able to find several models that you can run on Windows, Linux, or your MacBook locally, with no server support. For example, if you have an M2 device, we have a version that allows you to run Llama 2, the biggest version, 70 billion, on a latest M2 MacBook. Of course, in that case you need 64 gigabytes of RAM, but it's really amazing that you can run such a big model on a local device you own, and you can think about what kind of future that enables.

Another fun thing we're doing is building an iPhone app that brings, in this case, a 7 billion Llama model directly onto your phone. It is already available on the App Store, so if you follow the link and download it, you can try it out. You will be able to chat with a Llama 2 model or a RedPajama model at quite decent speed. In this case it's writing a poem for CMU, and you can see it's doing pretty well. We also have an Android version that does a similar thing, so we're really bringing it to the wide variety of platforms that people are interested in.

Finally, one of the things we are doing is bringing it to the browser. There's a recent technology called WebGPU that allows you to directly use the GPU from the browser, on the client side. There's no server involved: you just load the web page and chat with the chatbot. This is a package called WebLLM, so if you're interested in building local language model applications, you can install the npm package and build JavaScript applications around it. In this case we are also able to run very big Llama models, depending on the local GPU that you have. Besides language models, we also support other things like Stable Diffusion: you can run Stable Diffusion directly in a browser in a very efficient way.

Okay, so that's the end of the talk. If you are interested in learning more
about machine learning compilation, we have a website including related courses, materials, and all the open source projects you can participate in.

Yeah, thank you so much. We can take a few questions. Are there questions from the audience? You can either raise your hand in Zoom or put it in the chat. We have a sort of congratulatory note in the chat, and then a question: can you speak more about WebGPU?

Yeah, so WebGPU is this latest technology that allows you to access the GPU on your local laptop through JavaScript and the browser. If you're familiar with WebGL, that's the previous-generation technology. In this case, effectively you're accessing a local GPU instead of running on a GPU on a server, so it's a client-side technology, and Chrome just shipped it officially, I think a few months ago. So if you are using Chrome, you just need to go and open a web page and it should be able to run on the local device.

There's also a question of why ML compilation is helpful. First of all, you'll find there are so many GPU backends, and you don't want to build accelerated software for each of them, so ML compilation helps you target all those backends that you see today. It also gives you more optimization, so effectively, if you want to get the best performance or the most out of memory, it will get you better performance than some of the other alternatives.

Yeah, there's another question in the chat: what are the career opportunities in this space for someone with a background in product design who doesn't code?

Yeah, so that's a great question. I think what we can think about is to imagine a future. There are different futures from today: one possible future could be that you run all the large language models from the big players in a cloud; another possible future could be that there will be a personal
assistant that runs locally together with the cloud, and there are questions about how to enable privacy and how to enable personalization. Thinking about what the technology can enable is really interesting.

Yeah, that's really exciting. Any other questions? I have one question: it's very interesting that you have these phone apps, and a lot of people are working on how you can compress down models so they could actually run on your phone locally. What do you see as the trade-offs in that space? Where do you think you would put your money?

Yeah, so for example, right now we do need to do heavy quantization. In order to run the app on an iPhone, you need to fit everything into four gigabytes of RAM, so that's a hard limit, and in this case we have to quantize to three bits, which is a very lossy compression. I think it's still a very active field to work on, and we're also building a foundational tool so that if you are working on a new model or compression algorithm, you will be able to plug it into our framework and run the model on the phone.

Yeah, there's a comment in the chat that the trade-offs might be different in developing countries where the internet connectivity is not so great. Is there one more question, or should we move on to the next talk? I'm not seeing another question. Thank you so much, Tianqi Chen, and I'm so sorry for saying your name wrong earlier; that was my oversight. But thank you so much, this was very interesting and inspiring. And now, last but absolutely not least, we're very excited to have our second faculty Spotlight talk, by Norman Sadeh.

Well, thank you, Carolyn. I think there's a nice transition here from Tianqi's talk to my talk on privacy. As he pointed out, one reason for trying to build these models on cell phones has to do with privacy issues, but historically,
as you probably also realize, privacy has often been looked at as an afterthought, and perhaps also an annoyance, by the AI community. So what I'm going to do in this talk is attempt to first explain why privacy matters and what privacy is, and I'll talk about my research within this area, including how we have been using AI, including generative AI, to enhance privacy. Along the way we'll talk about why privacy is challenging. So that's what I hope to do over the next few minutes. I'm not quite sure how much time I have, Carolyn, but I was told about 20 minutes, so I'm going to try to stick to that if I can.

Yes. So when I have more time, I like to walk people through scenarios that require them to think about the information they might have to provide to insurance companies as they go shopping for insurance policies for their new car. We don't have the time to do that, but I'll briefly summarize what typically comes out. I've probably done this about 100 times over the past 15 years, and I pretty much always ask the same questions. This is just a subset of those questions, but I ask people about disclosing how fast they drive, as self-reported disclosures, but also discuss the possibility of them installing a GPS unit that's going to be reporting their speed to their insurance company, or using apps on their smartphones to do exactly the same thing. The kind of answers that you get vary a lot from one group of people to another, and obviously they also vary based on the way in which that data is going to be collected, for instance whether it's self-reported or collected using sensors. I also go on and ask people about sharing how well they sleep at night, which, as you probably realize, is often correlated with the likelihood that you might crash, and ask them about scenarios where it's self-reported but also, as you probably realize, collected with your smartwatch, your smartphone, and even sensors that these days can be embedded in your
bed or your bed sheets. I ask them how they feel about those scenarios, and those are typical types of statistics, although there's quite a bit of variation from one group of people to another. The reason why I walk people through that is to make a few different points. Number one, not everybody feels the same way about these scenarios, so you actually get quite a bit of variety in terms of answers. But I always point out, and I've done this at least a hundred times, that there's never been one time where a single individual in the audience has indicated that they were willing to share all the information that was contemplated in these scenarios. So the point here is that everyone truly cares about privacy, and that's a point that really matters. And the third point is obviously that all these data can readily be collected these days, whether it's through mobile and IoT devices or inferred using AI and machine learning algorithms. So hopefully you're convinced, and you sort of tried to play this very quickly in your head as I was quickly walking you through these scenarios that I like to spend a bit more time on with people when I have more time available.

So what is privacy? I could spend at least an hour talking about privacy, but it's an evolving set of expectations and concepts and rights. This goes back centuries, to when people started to recognize that people probably have some kind of fundamental right to life and some fundamental right to property. Over the years these rights have evolved: the right to just remain alive has extended to the right to enjoy life, which has included, for instance, if you go back a century, the right to say, hey, that train track that passes next to my home, that wasn't there when I bought my home, that's extremely annoying, that's a violation of my privacy, the smoke, all these sorts of things, but also protection of your
reputation, slander, libel, and eventually also looking at property as extending to intangible assets, including data about yourself. So this is truly an evolving collection of rights. But for the sake of this presentation, first of all let's just quickly go over a couple of definitions of privacy, which is a very, very broad concept, as I think I've already shown you. It was defined in 1890 as the right to be let alone, free from surveillance or interference from other individuals or organizations, including the state. More recent, modern definitions are basically the right and ability of an individual to define and live his or her life in a self-determined fashion. You might say, okay, what does this have to do with anything? We're talking about generative AI here. Well, we're going to focus more specifically on information privacy in a minute, but just moving along, and so that you appreciate where privacy comes from, it's enshrined these days in a variety of different documents: the UN Universal Declaration of Human Rights back in 1948, or the US Bill of Rights, with the first 10 amendments to the Constitution. Nearly every amendment in the Bill of Rights has a privacy dimension, starting with the First Amendment: freedom of speech, freedom of opinion, freedom of assembly, and so on. The Fourth Amendment is one that's often referred to; it's about warrantless search and seizure, the right for people to retain some privacy in their home, in their private papers, and so on. And obviously there are many more specific laws that have emerged over the years, for instance COPPA for children; FERPA, for those of you who are in education, which has to do with the protection of data about students; Gramm-Leach-Bliley, since this is a session about finance, financial apps and services, so Gramm-Leach-Bliley is the Financial Modernization Act, which has some privacy provisions; HIPAA for health; but also state laws like California's CCPA and CPRA, and a variety of different laws around the world. Often you hear, for instance, about GDPR, which is a law that has had a very major impact around the world over the past five years.

So for the purpose of AI and information privacy, we're going to focus primarily on what people call information privacy, and ultimately, to keep it simple, this has to do with the ability of individuals to retain some control over the collection and use of their information. In practice this has been implemented through a framework that people often refer to as notice and choice, which is basically the idea that there should be some kind of a privacy policy that's going to state what you do, what data you collect, how you use it, and all sorts of other things, and you're also going to try to give people some control over what happens to their data: some choices, such as perhaps the ability to opt in to some practices or opt out of some practices, or additional types of control, which we don't necessarily have the time to delve into in much detail. But from a practical standpoint, as you work on AI and generative AI technologies, you need to know a few
things, and you need to know in particular that over the past five years there's been a bunch of new regulations that have been introduced. These regulations are much more stringent in terms of what you need to disclose in your privacy policy, and they're also much more stringent in terms of the rights and controls that data subjects, namely those people whose data you're collecting, need to be provided with: not just necessarily opting in or opting out of some of these, but also the ability to review data collected about them, delete that data, and object to automated processing, which is something that you have for instance in the EU privacy law, GDPR, the General Data Protection Regulation, and a variety of other expectations. Along with these expectations, much, much steeper penalties have been introduced. The biggest fine in this space remains the five billion dollar fine that Facebook was slapped with by the FTC here in the United States, but if you look at all sorts of other fines over the past few years, they get very steep, hundreds of millions of dollars sometimes. And don't think that you need to be Amazon or Google or Facebook to get under the scrutiny of these regulatory agencies: small players have also been slapped with very severe fines, so that's not something that you can ignore. I wanted to make that very clear.

But now let me slowly get to the point of how AI can help with privacy. I've mentioned this concept of notice and choice, the idea that you're going to have a privacy policy, that people are going to read this privacy policy, and then they might be able to exercise some choices depending on whether they like or don't like some of the things you said you're going to do in terms of the data you collect and how you use that data. So, number one, nobody reads these privacy policies. I'm not even going to bother doing a show of hands here; I know what the outcome will be. So
it's been said that only in Fantasyland do people read privacy policies, and one of the consequences of the new regulations that have been introduced over the past few years is that these privacy policies have actually become even longer, perhaps about 25 percent longer. So if nobody was reading them five years ago, what are the chances they're reading them today, especially if you're thinking about reading those policies on cell phones? And then there are all these great controls that we're giving to people to restore agency and the ability for people to really be in control of what happens to their data. Just look at your cell phone and the number of different settings that you have, for instance just when it comes to the privacy of your mobile apps. I published this some years ago: a typical user with between 50 and 100 apps would have to manually configure somewhere around 200 different permission settings to actually be fully in control. So who's got the time to do that? At the end of the day, all these regulations are great, except that they're highly unrealistic about what they're expecting people to do in terms of expertise, whether they actually understand the choices available to them, the data practices, and the implications of agreeing to certain types of practices. People often also lack the attention and the motivation, because as it turns out privacy is always a secondary task. What that means is that typically the privacy decisions that you have to make arise in a context where something else is the primary task, such as downloading that game app on your cell phone and starting to play with it. That's your primary task, and the privacy decisions that you make along the way are things that your brain will tend to dismiss because they're secondary tasks. Your brain will tend to basically decide that, hey, I shouldn't be too bothered about this, the consequences are highly
uncertain, let's not worry too much about that. So a lot of my work in this space over the years has really been to ask how AI can help people manage their privacy. If they don't read privacy policies, could we read these policies for them, for instance? If they don't have the time to configure all these settings, could we help them configure those settings? Those are examples of things that we've been doing. Along the way we've also done a lot of work in the area. I'm co-director of the Privacy Engineering Program here at CMU, and that includes doing a lot of AI governance these days as well, and that means helping organizations develop more systematic practices that will ensure that they take privacy into account, and that they take into account risks associated with using AI, as they design their products from the very beginning. So there are all sorts of different things that need to be done within that space if you want to do things right.

A lot of my work in terms of helping users has revolved around the use and development of what we call privacy assistants. Those assistants are designed to help people understand practices that matter to them without requiring them to read the entire text of policies, helping people configure, for instance, settings as far as what data their mobile apps can access, nudging people to pay more attention to those decisions that they tend to dismiss because privacy is a secondary task, as I mentioned, and all sorts of other things that I don't have the time to talk about. Examples of things that we've done, and I'll go very quickly here, and we've been doing this sort of work for about 12 years now: we've been looking, for instance, at the text of privacy policies and automatically extracting opt-out choices that were traditionally buried very deep in the text of these policies, and are therefore choices people are never really aware of and would never take advantage of. So we've built, for instance,
browser extensions that are available in the Google Chrome store for people to add to their Chrome browser, and that basically make these choices much more readily accessible. We've built assistants to help people configure the privacy settings on their cell phones, using machine learning techniques to try to understand how groups of like-minded users can be identified, and how, based on how you feel about a small number of issues, we can recommend a variety of different settings for you. Nudges: those are technologies, by the way, that have actually been introduced in versions of iOS over the years. iOS 13 introduced nudges like the one that you see here: did you know that your location has been shared 5,398 times with Facebook, Groupon, and GO Launcher over the past 14 days? I can tell you that when you show that to someone, even though traditionally they may have been ignoring privacy, all of a sudden they want to know more, and they may want to modify a number of their settings. And then privacy question answering, which is the closest to generative AI.

But before getting to generative AI, just to give you a sense for what else you can do when you look at, for instance, the text of privacy policies: you can look at all the statements that a company makes in its privacy policy, and then you can say, hey, let's compare that with what, for instance, this app actually does. So if you analyze the code of an app, or if you look at the behavior of an app, which is called dynamic code analysis, and you compare what you see with what the company might be saying in its privacy policy, you often find a bunch of discrepancies. These discrepancies are what will get you in trouble. This is how the regulators will come after you and say, hey, this is misleading, we're going to slap you with a 10, 20, 30 million dollar fine, because you've been saying certain things but the behavior of your code is entirely different. And so just to
give you a sense for how severe the situation is in this space: we actually analyzed, at some point back in 2018, over a million Android apps on the Google Play Store, and we found that on average there were about 3.47 potential compliance issues per app. Over 600,000 apps had compliance issues. So this also gives you a sense for how challenging privacy is for your regular developers, and that obviously extends to people working in generative AI.

Most recently we've said, well, if people are not going to read the text of privacy policies, could we potentially just allow them to ask whatever question is on their mind, look at the text of the privacy policy and perhaps other things, and then automatically answer their questions? Examples of typical questions, and we've collected very large corpora of questions, include things such as: does this app share my location with advertisers? That's a pretty simple question, but also: should I be concerned that this app shares my location with, let's say, analytics companies? Some number of these questions these days, thanks to some research we've also done over the past 10 years, are directly addressed now in what people call privacy labels. You might have seen these in the App Store. But about 50 percent of the questions people had are not addressed by these labels, and these labels honestly are already way too complex, and most people are not even looking at them. So we've asked ourselves how we could potentially answer these questions so people don't have to read policies and don't have to look at the labels: let them ask what's on their mind, and see if you can answer these questions. We've played with ChatGPT, we've played with a variety of LLMs over the years, and there are a number of challenges that need to be addressed. So my talk here is not about, like, we've solved all these problems and it's done; it's more about here are some of the things we're doing and here's roughly where
we are. For instance, issues that you have to deal with when you read the text of a privacy policy are that the policy might be referring to your GPS data being collected, and then you've obviously got to make the connection that, hey, GPS data is, for instance, a subclass of location data. But there are also much more subtle types of inferences that you need to make. For instance, if a policy says we ask you to tell us how you go to work, and whether you're driving or biking or walking, as it turns out this information can also be used to make inferences about your health, whether or not you exercise. And then beyond that, and this is a huge challenge that ChatGPT struggles with, is the fact that because privacy policies are written by lawyers, and lawyers in general will work very hard at not telling you things they don't have to tell you, there's a lot of silence in the text of privacy policies about questions that people have. The question is how you interpret that silence: what sort of inferences can you make about it? Depending on the regulation that's relevant to the situation of a given person, there might be regulations that say if you do this you've got to disclose it, and there are other places, like Pennsylvania, where most of the time you can do things, and as long as you don't state that you don't do them, it's actually okay. We look, for instance, at typical questions that people have, like does this app share my credit card information, and about 16 percent of the time the text of the privacy policy will not tell you; it's going to be silent about that issue. Or 50 percent of the time the answer will really be only implicit. So the challenges that we've got to deal with within that context are how do we interpret silence given what regulations require you to disclose, and how do we turn all that into a meaningful answer for users. We've actually had some success at representing this knowledge and then adding this as an
extra layer on top of ChatGPT and similar types of solutions. I'm going to quickly wrap up because I feel that I'm probably running out of time; I see Carolyn starting to get perhaps a bit nervous, or she's doing a very good job at not pretending that she is. The most recent work that we've been doing in this space has also been looking at how we produce personalized answers for people. Even in answering these privacy questions, as it turns out, you can come up with generic answers, but that's not terribly valuable; you want to adapt to your user and what they know. This includes, for instance, the age of your user, their gender as it turns out, and attitudes towards risk: does the user actually realize that if they agree to this practice they're exposing themselves to all these potential risks, or do I need to potentially let them know? And beyond just answering questions, could we somehow proactively tell people that, hey, you forgot to ask me this question, but you probably want to know about this stuff, and how do we use generative AI for that? There are all sorts of challenges in this space that I don't have the time to talk about, but one reason why this is so challenging is that answering the same question in different ways has a very different impact on what people will understand.
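
Editor's illustration: the two inference problems described in the privacy talk (recognizing that GPS data is a subclass of location data, and interpreting a policy's silence in light of what a regulation requires to be disclosed) can be sketched in a few lines of Python. This is only a toy sketch under stated assumptions, not the speaker's system: the `SUBCLASS_OF` ontology, the `answer` function, the answer labels, and the single `disclosure_required` flag are all hypothetical names and simplifications introduced here.

```python
# Hypothetical mini-ontology (an assumption for illustration): child data type -> parent category.
SUBCLASS_OF = {"gps": "location", "credit_card": "payment_info"}


def is_a(data_type, category):
    """True if data_type equals category or is a (transitive) subclass of it."""
    while data_type is not None:
        if data_type == category:
            return True
        data_type = SUBCLASS_OF.get(data_type)
    return False


def answer(category, policy, disclosure_required):
    """Answer "does this app share <category>?" from explicit policy statements.

    policy maps a data type to True (policy says it is shared) or
    False (policy says it is not shared). Types that are absent are "silence".
    disclosure_required says whether the applicable regulation forces
    disclosure of this kind of sharing (a simplifying assumption).
    """
    # An explicit "shared" statement about the category or any subtype means yes.
    if any(shared and is_a(t, category) for t, shared in policy.items()):
        return "yes"
    # An explicit "not shared" statement about the category or a supertype means no.
    if any(not shared and is_a(category, t) for t, shared in policy.items()):
        return "no"
    # The policy is silent: read the silence in light of the disclosure mandate.
    return "probably not" if disclosure_required else "unknown"


policy = {"gps": True}  # the policy only mentions GPS data
print(answer("location", policy, disclosure_required=False))
print(answer("credit_card", policy, disclosure_required=True))
print(answer("credit_card", policy, disclosure_required=False))
```

The first question is answered through the subclass link, while the two credit card questions show how the same silence reads differently depending on the regulation, which is the distinction the talk draws between strict-disclosure regimes and more permissive ones.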

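Editor's illustration: the quantization figures quoted in the Q&A of the first faculty talk (a 7 billion parameter model, a 4 gigabyte limit on the iPhone, three-bit weights) can be checked with back-of-the-envelope arithmetic. The Python sketch below counts the weights only and ignores activations, the KV cache, and the app itself; the helper name `weight_memory_gb` is hypothetical, and the 4-bit setting used for the 70 billion model is an assumption, since the talk does not state the precision used on the MacBook.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


IPHONE_BUDGET_GB = 4.0  # hard limit quoted in the talk

for bits in (16, 4, 3):
    size = weight_memory_gb(7e9, bits)
    verdict = "fits" if size < IPHONE_BUDGET_GB else "does not fit"
    print(f"7B at {bits}-bit: {size:.3f} GB, {verdict} in {IPHONE_BUDGET_GB} GB")

# Assumption: 4-bit weights for the 70B model; the talk does not state the precision.
print(f"70B at 4-bit: {weight_memory_gb(70e9, 4):.1f} GB")
```

On these assumptions, 16-bit weights for a 7B model are far over the budget, 4-bit weights take 3.5 GB and leave very little headroom, and 3-bit weights take about 2.6 GB, which is consistent with the speaker's remark that heavy, lossy quantization is needed on the phone.
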
2023-08-27 23:32