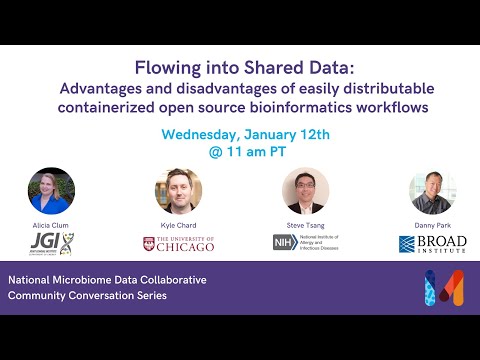

Community Conversation – Flowing into Shared Data

Okay, so hopefully everyone can hear me and see my slides. Welcome to the fourth iteration of this conversation series from the NMDC. Today we'll be talking to you about bioinformatic workflows: less the specifics of the workflows and more some of the challenges, advantages, and disadvantages of providing these types of containerized workflows and enacting them for microbiome research.

Our agenda today is outlined here. I'll be giving you a brief introduction to the NMDC, the National Microbiome Data Collaborative, and some of the bioinformatic workflow efforts that we've conducted within our pilot project. Today we have four expert speakers. I'll introduce you to them a little bit more later, but they're listed here. Of note, we will be hosting a large panel Q&A session. There's a shared notes document that should be provided to you in the chat box; feel free to enter all your information there: questions, or even your emails, et cetera. All of this will remain editable for 24 hours, and then this recording as well as the document will be viewable and available on the NMDC website.

I'll start by mentioning what most people already know, those of you who've been in genomics or in microbiome research for some time: we've been undergoing a pretty dramatic shift in the volumes of data that are coming out of newer technologies, in all flavors of omics data, and they're quite varied in terms of the types of samples or the types of questions that people are looking into. Generally speaking, from a microbiome research perspective, you're interested in putting your data into perspective with everyone else's, but access to these data can be somewhat challenging. In fact, you can spend the majority of your time trying to find other people's data that you can use to compare with yours, and then there are further challenges in terms of how comparable they truly are. So our effort
in the NMDC is to change this mantra into a situation where you can readily search for other types of samples that fit your types of questions. If they're in a particular animal model, or if you're looking at a particular geographic environment, you can find other data sets that might be useful for comparisons, then see what other data is available, and then access those data.

The NMDC is a pretty large multi-national-lab effort out of the Department of Energy that is really trying to address this question quite broadly. Our main mission is to provide FAIR access to data (findable, accessible, interoperable, reusable data) and apply this to all flavors of microbiome data. We're focusing primarily on initial varieties of omics data generated by sequencing machines or mass spec instruments, but our goal would be even broader than that. We have a few publications, including one that pertains to bioinformatic workflows that has just been accepted, and you're also welcome to browse our main sites for any documentation or any of our work conducted so far.

We have a live portal website that's currently available. It's not populated with a tremendous amount of data; in part that speaks to how difficult it is to link all the appropriate metadata for a sample, or metadata for a data set, to all of these raw omics data sets. But this is a vision of what we may want or may be able to do in the near future. The goal is to be able to search for any key term. All these metadata are organized in a formal ontology; we have a schema that we've been refining and merging across a few different ontologies. If you're interested in ontologies themselves, there was a conversation as part of this series several weeks ago, and that recording is available on the NMDC website as well. Once you are able to find your data sets, you may want to narrow down which data sets you're actually interested in, and then you should be able
to get access directly to those data sets. Right now you can do all the searches without logging in or registering, but you'll need an ORCID to register, and then you should be able to download data sets and results that are provided on this website, with all the requirements on how best to cite those data.

So finding the data is certainly a key part of the NMDC pilot project. Another key component is to then allow cross-comparisons across different types of data or different environments, so that you can put your project into perspective with others. This requires a little bit more thought on the bioinformatic workflows, which is the topic of today's conversation. In the NMDC we focused on established workflows that have been working and running on a large number of projects at the Joint Genome Institute as well as the Environmental Molecular Sciences Laboratory at PNNL, the EMSL. We've first tackled a variety of typical flavors of omics data. There are innumerable workflows available out there, so we've had to pick and choose to work with some of the ones that we're confident will give you some best-practice answers. We've had to rewrite all of these, of course, because everyone's suite of tools, their workflows, their pipelines are all pretty much dedicated to their own environment, and our goal really was to make this a lot more interoperable: allowing any user to grab these workflows and produce the same results that we would be able to produce, either in the cloud or in any given institution.

In our hands, we've run a number of tests and validations on these workflows. We've run them in a number of different venues and then compared results with at least two different data sets, which are also available through our main GitHub site. We also have made these workflows available in a couple of different venues. One is the NMDC EDGE link that I've provided
on this website. This is an interactive GUI run capability where you're able to upload your own data and process them yourselves. This will also be made available via the DOE KBase platform.

Having the workflows done is certainly one thing, but we are also thinking about how to scale and how to manage these data writ large. We've been developing an automation API that would allow us to register different sites for where the data is located, and to register samples so that you can standardize and have all of your metadata be searchable. Then, once you have those data, we have two initial nodes. One is at NERSC, which is at the Lawrence Berkeley National Lab, is coupled with the Joint Genome Institute, and so has access to those data; all of the metagenomic or metatranscriptomic workflows would execute there. At the EMSL lab we have a number of workflows that will work there for the mass spec data. All the data products, all the analysis outputs, would then be registered in the central store, which could then provide you access to those results as well and make some of those results searchable.

Today's topic really revolves around workflow best practices and grand challenges, and has less to do with the nuances of each workflow, like which bioinformatics tools or what parameters or what thresholds you use. We have a broad range of expertise in our panel. We have Alicia Clum, who oversees a lot of the genome assembly and analysis at the Joint Genome Institute. I've known her for years; she's excellent, and runs a number of these projects in the cloud as well as locally on their HPC. Kyle Chard is a research assistant professor at the University of Chicago in the computer science department, and he co-leads the Globus Labs effort. There's a Globus platform that really enables large data sharing across anywhere in the world, and it is being used by a large number of groups to enable data sharing and management.
We have Steve Tsang at NIH. He's part of both NIAID, the National Institute of Allergy and Infectious Diseases, and its Office of Data Science and Emerging Technologies, which I know is a mouthful, and he'll be talking to us about best practices at NIH for some of the national cancer efforts. And we have Danny Park at the Broad Institute. He's a group leader in the viral computational genomics effort, and he runs a large number of projects as well. He's been a cornerstone in developing WDL and Cromwell, which is a workflow manager that we use in the NMDC as well. So I welcome and thank our invited panelists, and I will let you take over and introduce yourselves a bit further, as well as your ideas and any key messages you want to relay in terms of workflows. I guess we'll start with Alicia.

Okay, thanks. My name's Alicia, and as Patrick mentioned, I work at the Joint Genome Institute. I'm going to be talking about leveraging containers and WDL for metagenomics workflows at JGI. Next slide, please.

If you aren't familiar, the JGI provides the global research community with access to advanced genome science capabilities for advancing solutions in bioenergy and environmental grand challenges. Next slide. We have a couple of mission areas, including bioenergy, carbon cycling, and biogeochemistry. We do this through a couple of science programs and then capabilities, both instrumentation and computational. Today I'll mostly be talking about the metagenome program and how we do computational analysis on the sequencing data that we're generating from samples that are sent to the JGI. Next slide.

Here's an overview of the workflow. What's happening in the lab is shown in green, and computational analysis is shown in blue. Generating metagenome drafts is one of our highest-throughput products at the JGI, so we're processing thousands of metagenomic samples per year. The workflow that we ended up with combines representation from the lab,
informatics, and then the science program leads, who really represent the users. The focus here is really on automation. There's a lot of plate-based work in the lab, and on the computational side we really need defined workflows and processes that don't change, so that we can process thousands of projects a year without having a large labor overhead. Next slide.

This is a detailed figure from a paper that we recently published in mSystems, which shows the details of the workflow. What you'll see here, if you're familiar with any of these tools, is that in almost every step we're using a third-party tool that's not developed by us. So really what we were looking at is chaining together these tools, and so something like WDL is a really good fit. Next slide.

Our strategy for portable workflows is very similar to what the NMDC is using. Tasks and workflows are defined using WDL. As Patrick mentioned, sometimes we need to run in different places, so what we've done is write the WDL as if we're running in Docker. Then, if we're running somewhere like NERSC, or other places that might have different container management software (for example, NERSC runs something called Shifter), we use a separate config file that can be passed to Cromwell to handle those different environments. That swaps out Docker for Shifter, or whatever container management tool is being used. We're using Docker for software management, so a lot of these containers are already available on GitHub and they can just be pulled. We found that this is pretty robust. And then we're using Cromwell to execute these workflows. And that's all.

Great, thanks. And we'll move to Kyle, I believe.

Awesome, thanks, Patrick. Hey everyone. I guess first up, thank you for the invite to come and participate in this panel; I appreciate it. As Patrick mentioned, I'm a researcher at the University of Chicago. I'm also appointed at Argonne as well, and I sort of straddle the
two worlds as best I can. In reading the abstract, I thought there were a few systems that we build that might be relevant. I'm more of a system builder than a workflow developer: we build systems to enable mostly large-scale science problems with large amounts of data or large amounts of compute. So I thought of some of the projects I'm involved in that might be relevant for this panel. The first is Parsl, which is a parallel programming library for Python that I'll speak about. Globus, which Patrick mentioned before, is a research data management platform; it allows you to very easily move, synchronize, share, and even search data in different places. And then funcX, which is our federated computing platform; you can think of it as like Globus, but for running function-as-a-service tasks in different places. Next slide, please.

One slide on my background, so you understand some of my perspectives throughout the panel. We've built this Parsl programming library over the last few years, probably about five now. Our approach has been very much to take a programmatic approach to the problem. Rather than think of workflows as declarative specifications that tend to get obsolete, or that you tend to need to jam control logic into, we thought, why not start in a programming language, where we can enable workflow semantics through constructs in the programming language? The benefits, we think, are that workflows really are programs, and we need to be applying the same types of processes to looking after them, building them, versioning them, et cetera, as we do with any other software. Our Parsl library is used relatively broadly. I've listed a few examples of biomedical domains there, some omics work, but also imaging, and a lot in places like cosmology and materials science and other places. Next slide, please.

Okay, and so I was asked to answer a couple of questions here, so again, probably to level-set where I'm coming from. How do we define
workflows? In our world, workflows are defined in Python, where there are some constructs that allow you to string together components to create workflows that execute in parallel. We think this is useful because Python is fairly broadly understandable. There's obviously the great language support, so you can do almost anything; there's the rich ecosystem of libraries and tools around the edges to help you build up the types of analysis you're doing; and of course you can leverage common best-practice software engineering approaches.

What does workflow use look like for us? We work a lot with the national labs, so we run a lot on supercomputers, but we also work with institutional clusters, generally HPC systems. Workflows for us range from things that run on single nodes to thousands of nodes, from small single-core tasks through multi-node MPI tasks, and from things that run for short periods of time to longer simulations. Containers, of course, are very popular, but like Alicia just mentioned, we have to deal with these different container technologies on each of the different systems we work with.

Who runs these workflows? Primarily it's people that want to parallelize their codes: often people that start in Python and want to go from their laptop to a supercomputer, sometimes people that have workflows and want to move to Python because they're struggling with having to throw a bunch of JavaScript into a declarative specification. Our users are very diverse in terms of how often we deal with them. Sometimes they run things once a year, sometimes they run things once a day, and the data really is very broad. I think I've basically said everything on this slide, but we can dig into the details as we go. Thanks.

Okay, we move on to Steve.

Okay, thank you. Thank you, Patrick and the organizers, for the opportunity to be a part of this discussion. My name is Steve Tsang, and I'm a health scientist and a program officer in the Office of Data Science
and Emerging Technologies here at the National Institute of Allergy and Infectious Diseases. A little bit about our office: the Office of Data Science and Emerging Technologies, or ODSET for short, builds partnerships across NIAID to harness the power of data by coordinating NIAID's data science portfolio, and leads the planning and execution of trans-NIAID data science research programs, activities, and related initiatives, both intramurally, meaning within the NIH, and extramurally, meaning with the research community.

About six years ago, prior to joining NIAID, I was at the National Cancer Institute, and my work involved the research evaluation of the NCI Cloud Resources, which I will discuss today. In that capacity I developed containerized tools and workflows, mainly for microbiome, metagenomics, pathogen, and microbe-related analyses, and collaborated directly with researchers, users like yourselves, to migrate data and analysis to the cloud. Some of the software packages I worked with at that time included mothur, QIIME, BLAST, and SRA Toolkit, as well as other user-developed tools. So today I'll be sharing with you from that perspective. Next slide, please.

As a quick disclaimer, since I was working on the research evaluation: the views in this presentation are all my own and do not necessarily reflect the views of the NIH or the government. Next slide, please.

The CRDC, or the NCI (National Cancer Institute) Cancer Research Data Commons, is a cloud-based data science infrastructure that connects petabyte-scale data with analytics tools to allow users to share, integrate, analyze, and visualize NCI-funded research data to drive scientific discovery. The CRDC provides access to data-type-specific repositories, shown on the right-hand side in those databases in blue, white, and light green, including genomic data, proteomic data, comparative oncology, imaging, and more. The NCI Cloud Resources, shown on the left-hand side of the screen in the
cloud icon there, are a component of the NCI Cancer Research Data Commons that allows users to access those data in the cloud. The Cloud Resources also provide access to on-demand computational capacity in commercial cloud providers like Google Cloud or Amazon Web Services to allow users to analyze the data. They also provide support for data access through a web-based user interface, in addition to programmatic access using APIs and such. For me, a lot of what I did at NCI was to develop scientific use cases with end users, leveraging the NCI Cloud Resources to do microbiome, microbe, and metagenomics-related work. Currently the NCI Cloud Resources are hosted by the Broad Institute, the Institute for Systems Biology, and Seven Bridges. Each Cloud Resource has developed a unique infrastructure with a variety of tools to access, explore, and analyze molecular data. Next slide, please.

I was also asked a few questions here; I'm just answering one: how do we define workflows? Since we have three NCI Cloud Resources, the definition varies slightly, but the idea is very similar. Let's start from the bottom. In the Seven Bridges Cancer Genomics Cloud, the idea of apps includes both tools, which are individual bioinformatics utilities, and workflows, which basically are chains or pipelines of connected tools. The Seven Bridges platform supports the Common Workflow Language. Moving up one, for the Institute for Systems Biology, or ISB, cloud: that platform is intentionally designed to be a lightweight infrastructure on the Google Cloud Platform, so it's really workflow-specification agnostic. You can leverage any workflow manager that's out there, and the users have at their disposal all the tools and technologies that GCP has to offer. Moving to the top, the Broad Institute (I think Danny will also talk more about this): the Broad Institute's FireCloud, or Terra, uses pipelines or workflows in
Workflow Description Language for the processing of data. I've included references for all three of those cloud resources; feel free to check them out. Thank you very much.

Thanks, Steve. And next we have Danny Park.

Thanks, and thanks so much. My name is Danny Park and I'm at the Broad Institute. Why don't we go to the next slide. I was asked to say a little bit about what our group does. I'm in the viral genomics group, which is a research group, and I oversee a lot of the computational methods development and analyses in this group. Our group mostly tries to find something at the interface of research and public health, where genomics and viral metagenomics inform the response to viral outbreaks and things like that. In years past, even during big outbreaks like Ebola and Zika, I usually had to explain to folks why viral genomics or metagenomics was actually a useful, applicable thing epidemiologically in these types of scenarios. I actually feel like one thing COVID did is broaden the general understanding of ways in which genomics can be useful. So I'll move on from here, and maybe we'll go to the next slide.

A little bit, from a really high level, about what kinds of workflows we have. Over the past decade or so we've endeavored to move a lot of our in-house research tools for viral genomics and metagenomics out of our group, out of the Broad, and to our partners, and I'll talk a little bit about that. But under the hood, what's happening there is the same thing the other presenters here talked about. With our viral focus, we have viral assembly tools, particularly de novo tools for a lot of the trickier viruses, but also reference-based assemblies as well, metagenomics, phylogenetics, things like that; also tools for data release, ingest for INSDC, so on and
so forth. But as I said, the emphasis here has been on portability and use by some of our collaborators. And yes, obviously we write these in the Workflow Description Language that the Broad uses a lot, or "widdle", as we like to call it. But the key here is that it's been important to us that it actually executes properly on multiple different platforms. Initially, for the past I'd say seven or eight years, our partners were primarily consuming our workflows on the DNAnexus commercial cloud computing platform; they're like Seven Bridges in a lot of ways. In the past couple of years, at least in-house, we've transitioned a lot of these to Terra. We also use CZI's miniwdl for a lot of dev and quick one-off use. But the point is that our emphasis has been on trying to make sure that these actually do work fine on all those different platforms. Let me go to the next slide.

As for our primary users: a little less than a decade ago we engaged in an NIH H3Africa project to help spin up pathogen genomics and metagenomics laboratories in a couple of key sites in West Africa. This launched the next several years of a really fun collaboration, setting up a lot of pathogen genomics capacity in West Africa, and a lot of the work there actually translated to more recent efforts and collaborations we had with public health labs domestically as well. This is to say that one of the lessons we learned over that time was about the cloud. Our group, probably more so than some others, has an emphasis on cloud as the primary platform that we try to execute these workflows on. Part of the reason we did that is that we found it got the workflows into more people's hands faster, and into more places faster, especially places that may not have had a lot of the computational or even electrical
infrastructure to do it otherwise. But it also just widened the user base even closer to home. In our own lab, prior to containerizing and cloudifying a lot of these pipelines, a lot of the compute had to be done by the computational people, the experts who are good at that kind of stuff. We found that as we transitioned a lot of this, our wet lab bench scientists, who are the main people asking the questions and generating the data, could start interrogating it themselves. So it widened the user base.

The reason I say all this, if you go to the next slide, is that one of the questions is how we choose what to implement and how to implement it. A lot of what exists today in our sets of pipelines is an outcome of several years of evolution, iteration, and feedback from the people who've been using it in the field and close to home. A lot of times a certain project, a certain outbreak, or even a certain use case just highlights, "Oh, I wish I had this feature, I wish we could change these knobs, we need a new kind of functionality for this." That's actually what's driven the software development of a lot of this. Next slide.

Oh, the one thing I did want to say: we're not the only ones on this panel who use GA4GH-promoted languages like WDL or CWL or any of these other types of things. But one of the things we did make a point of doing was trying to distribute these pipelines through one of GA4GH's tool registry services. Dockstore is the flagship implementation of this. If you're not familiar with it, you can go in some other time, but it's a hub. In the same way that Docker Hub or Quay could be your hub for
containers, and GitHub could be your hub for raw code, what about a place that actually is the hub for the containerized workflows themselves? Whether they're written in Nextflow or Galaxy or WDL, they can be in this place. The strength is that it has integrations and APIs that allow cloud platforms that know those languages to just automatically pull them in. So you see this "Launch with" button, right? You can just click on the DNAnexus button and pull my pipeline into there, that kind of thing.

The other thing that it did is give us an easier way to give people access to different versions. A user can decide they want to pick the latest and greatest master branch version of the pipeline at all times, if they want to run our latest code; or they can pin their pipelines to version 2.1 of what I released a few months ago; or, if they're asking me about some bug to fix, they can run my dev branch that I just opened up today. So it gave us a conduit to give people access to different versions too.

So, last slide. I think I was just asked to comment on who uses this. I spoke a little bit about collaborations. How do they run it, and at what scale? I can't actually speak to what scale a lot of our collaborators use it at. I do think we have someone from Theiagen on the line here who might be able to speak to some of the other use cases, domestically at least. But obviously we use it in our own house. For us, the most heavy use has been our CDC surveillance contract that we've been doing over the past year, where we'll sequence anywhere from five thousand to ten thousand SARS-CoV-2 genomes per week. That's like a dozen NovaSeq flow cells that we're analyzing, and that's at a scale where you want to automate as many steps as you can. And so we did. I can talk a little bit about that later, but that's just to speak to how it's being used. All right, sorry, I don't want to take too much time.

Well, thanks a lot. That was a good wrap-up to this session, and a lot of the latter part speaks very clearly to some of my group, who have been using essentially the same suite of tools. I think all the speakers have done a wonderful job navigating a variety of different use cases, and probably represent a large swath of different attendees. So again, I'll welcome everyone online to participate by going to the shared notes document (Marissa, if you can post that again). You can post your questions there; you can also do it in the chat.

I think I'll just start off with a general question for most of you. Just like with anything else, you're sometimes using workflows in a very specific manner: you develop one type of workflow for a particular situation or a particular project. So it's hard to ask anything that really covers everyone broadly, outside of: do you see room for improvement? Danny spoke of this iterative "I wish I had these extra buttons and bells and whistles for this particular workflow," and you just iterate on that. That's like improving code or improving an algorithm. But is there something broader in terms of distribution of workflows? If you've managed to distribute your workflow, either using something like one of these hubs where you can distribute the workflow itself, or if you just do it in containers, are there any lessons learned or better ways to disseminate kind
of a standardized way to process data that you've learned over the years throughout your own trials and tribulations? I think we can just go in the order of presentations, if that's okay with everyone, or you can pass if the answer has already been covered by others.

Yeah, I can start. We're just using GitLab right now to share our existing WDLs. I do think there's some work to do in terms of standardization of tasks, so that they can be reused between workflows, in terms of importing tasks. And we've been pretty happy with WDL overall.

So, in terms of improvement, I think there are lots of areas for improvement. I think containers have actually been a really needle-moving type of technology for us, especially with the widespread adoption and the familiarity that researchers have gained with containers. I think they're now fairly comfortable for people to use. I would echo what Alicia just said around versioning and GitHub; as a mechanism it's, again, widely adopted. I think we should be looking at methods that are broadly adopted elsewhere and trying to use them for this type of purpose. But in terms of workflow-specific problems, I think portability is still a fairly significant challenge, especially in the DOE world, which I work in. Moving between computing facilities is not nearly as easy as you would imagine it to be, even if you're told it's going to be easy with whatever you're using. Container technology between systems is different, as is how you even build containers on these systems, depending on underlying hardware, system-level stuff, MPI libraries, et cetera. Portability is a bit of a dream to some extent in that world, but I think we are starting to at least move things a little bit further. I think continuous integration and testing in these different systems is a pretty big problem. I think workflow systems themselves probably have very little faith that their system or the
configuration for a system will work on any given day in a particular computing facility outside of say an environment where we have very uh tight control schedulers change um options on schedulers change configurations change et cetera so i think pooling knowledge there would really help us in terms of portability i think it's probably enough of a rant for me i'll pass to the next person thanks guys so from my perspective i work a lot with the end users as i said in my introduction and a lot of those end users are less computationally savvy than probably most of the audience here today so but from my perspective i notice there's a very steep learning curve either getting into containers or getting into computational workflows it can be really daunting for someone who's new to the field so for example containers even for those who have some understanding of containerization if you really want to scale it up but managing orchestrating containers for multiple micro services can still be very challenging so for me i think the number one thing i've learned throughout the years is that there's a very steep uh learning curve the other one i've also noticed is documentation that's a little bit related to kyle's point about continuous integration and you know just putting your tools code or uh work or even workflow in a sharing environment how well can you how would you document what are some of the best practices in terms of what's included in the documentation a lot of times what we found is it's really not clear if you're trying to use other people's uh containers what exactly is included in that what version of everything and how the tools are version controlled so to me that's also a big challenge and also for containerization as a technology we all are aware that security is an issue you have to run docker for example as root and you cannot run that on a lot of clusters probably most of the hpcs so exactly how secure containers are and what kind of data that you're analyzing
especially for controlled access data that's also uh what we faced um i'll just say that i've um you know i ended with a little just a bit of description of how we're kind of distributing it and trying to achieve a certain amount of portability now obviously the extent to which that actually reaches the ideal requires a lot of kind of end user testing and very you know um as was mentioned is not actually that easy to automate and it's partly just kind of understanding what the rough edges look like when you try to run this on that platform versus that platform and that kind of thing so um but you know i think uh in terms of just kind of uh the reuse and the you know getting it into a wider user base and things like that i think what i've found is that you know we can work pretty well with the collaborators we work very closely with and have like the euro but then when uh you know in this past year as we uh started to uh deploy to a larger audience domestically uh in u.s public health labs we partnered with uh i mentioned this before theiagen genomics um and a lot of the work there was actually translating some of this stuff like kind of the user's inputs and outputs and just the feel of it where the guts may actually be all kind of the same um but the user expectations of like well i have this file or i want that file and so it's this kind of funny thing where and it's um you know i'm thinking of what alicia said was like wish there was a way to somehow commonly um uh just maintain or share the tasks themselves like the little unit pieces that actually are the same under the hood for a lot of us um and we shouldn't have to independently maintain but the dressing on it uh like the actual way that you spread it together understandably might be different for this lab or that user because you know they always like to run this step for that or they always you know so um so yeah that's just well thanks um yeah there are now a few questions
coming up i'll encourage others to chime in or do plus one on any questions you really want to hear i'll read one of them in the chat um so and it's regarding versioning um so versioning workflows parameters making them portable is one thing but how do people approach versioning if you will of data which the workflows work on and the data that's being produced by the workflows that's a million-dollar question in a way right i mean i assume that's kind of like reference data databases and things like that which a lot of these tools will depend on whether it's a metagenomic data blast database or some you know reference genome or some other kind of reference that um yeah i'll clarify that question so um this is robert michael i'm chief data architect at ornl and previously came from st jude children's research hospital uh where i was leading a bioinformatics group and we actually were developing workflows right on the cusp of wdl and cwl all coming out and stuff so good to see the progress here um and you know definitely one of the issues that we came across was you know actually making sure that whatever species or if it's human if it's dna exome anything like that um setting up automation to be able to uh make sure that these things as they're automated as jobs are finished as mapping is finished qc is finished any kind of downstream calling is finished that these things are uh well categorized has metadata attached to it and then as we have top off or if we have any contamination and have to re-sequence or something like that but that's all done and it was a lot of work to make that you know quote-unquote automated my team did a whole lot of that um automation behind the scenes i'm just wondering what the best practices are uh today and if there are some more widespread solutions to that i can start i guess so we have an internal tracking system which assigns ids and then analysis that like flows wraps up to a higher id
that's the same so if we have an assembly and an annotation those have different ids for those steps but then they share an id as it's you know work that's grouped together in terms of metadata we're using um a database so it's something internal that's called jamo which i think maybe we're trying to make open source but we used that to track any and all metadata and we found that having it not be a you know an sql database makes it very flexible then there's also a lot of overhead in terms of you have to be careful about what you name your keys and that different groups aren't using different names for keys that actually mean the same thing so um it's kind of a double-edged sword there but we found that that's actually really powerful and as part of that we're specifying inputs and outputs in the metadata and so that helps with data provenance i'll just say that i think my read on observing how a few different kind of platforms do it um you know i think it's seen as an orthogonal problem to the workflows themselves like that there's a certain data architecture that needs to be built that tracks provenance that does all those types of things um and it's outside of the workflow code itself it's part of the system that you're running the workflow code in i guess that's i'll say one more thing about that but i guess that was kind of a question you hit the nail on the head there is i was always a big you know proponent of the workflows should really be metadata associated with the data right so i mean like you should have you know the input parameters you use for cutoff or anything like that um to actually you know once you create your data the workflow may change over time and so you have to you know just like you have to track ci/cd for your workflows the data should have the workflows that were used along with it to be referenced for it so i guess that's where to me um it is
orthogonal but i guess i'm kind of raising the question like should they be and are people approaching that problem um but maybe that's a bit too off topic and if that's a different conversation then that's right i think you're right that it's at the intersection in a way it's in my mind part of the implementation of the execution engine or whatever is orchestrating it is somewhat responsible for at least providing a user the ability to trace those things through the versions of the workflows you know the containers that whatever that uh the input and things like that and a lot of them do have the ability because a lot of these execution engines are oftentimes doing like little call caching tricks and so they're actually tracking a number of these things are they exposing all of it to the user in a way that you can grab at it this kind of question i personally think your question is uh entirely within scope so tracking versioning and provenance is uh essential it dovetails into one of my uh other canned questions which essentially is you know when is it necessary to recompute uh your data and that has a lot to do with some of the native you know the majority of all bioinformatic tools are comparative in nature and so when the reference database is changed then all of a sudden your analyses could potentially change as well i know that's a really tough question to answer in your case you know i think for provenance whenever you're validating samples and results you know i think we should start at the sample level where you're tracking the samples from which the data comes from and if you're redoing analysis on the same sample or another process on the same sample as long as you can link it back there that would be good practice metadata for every workflow and every reference database so the version control all that is exceedingly important anyway if any of you want to come into this larger question of when should you recompute data about it
i would be interested in knowing i mean i can probably share some not exactly to uh to your question but a little bit about the question that was posted earlier and i think danny had a great point about really a lot of that is on the design of the platform on the seven bridges platform for example um data the platform is basically organized in different projects and within the project you can add all the data you need and then you can run the compute you are able to track every um input output and the provenance and also intermediate files with tagging features very solid features that allow you to kind of trace along where those files are produced at which step of the workflow so it's getting there it's not perfect but there are solutions out there anyone else yeah alicia yeah i don't think anyone wants to take the bait on your question patrick but um i'll just say in general at least for assembly if there's just kind of a minor version change we don't generally go back and redo everything um when new versions of you know ppm or whatever come out they do try and um reprocess those um generally we have plenty of work to do going forward so we try not to make that mountain of work even bigger by going back and redoing everything so um it just kind of depends but it is a big issue especially with metagenomics when you know you want to do a lot of comparative analysis um so it's really a balance that we're trying to figure out especially with things like time series like things are changing all the time with the instruments and the library protocols um so i think it's in some ways it's not if you have something like a really big time series that's just um something that you have to take into account because there's no way that there's variables there so there's kind of no avoiding it um does anyone here have a procedure to do this it's kind of like a comparative metagenomic effort where you're trying to assess you know
how things change either over time throughout your samples or when you're recomputing it with either a different database or with a you know a new version of a tool that's said to be you know much better in some way are you saying do we like actually do the regression test ourselves when there's a new yeah yeah that not so much i mean i think a lot of times i kind of hope and trust that whoever's handing down the new mandated version of the database um did their work uh and you know similarly like we will rerun old stuff if we have to for the project's sake a lot of times for you know the public good's sake once our data is out and released in the world um you know the primary data then you know uh it's kind of on everyone else if they want to reinterpret it right but um but yeah we don't have a process for that that sounds like the right thing to do though okay we may only have time for one more question but there is a question about the barrier to entry for the average um biologist or researcher i guess and um you know for any it person unfamiliar with you know either the specifics of whatever containers or workflow managers that are being uh espoused by any one group um plus all the dependencies associated with you know whatever uh queuing system or anything that's specific to the institute what solutions exist right now or is there any solution to make this a bit more seamless for the community both to jump in as well as to keep current with you know the best practices or is it just evolving so fast that it's uh you know you learn something and then you'll forget it in a few months because we've moved on since then i mean i'll just say that it's one thing if there's a barrier to entry to people uh writing uh pipelines and workflows but i think that's a little different it sounds like this is more a question about consuming and using and executing workflows and i think that's exactly why we for a while had uh
initially favored cloud platforms knowing that these pipelines can run elsewhere too um especially for places that had limited ability to support that um you know there's numerous commercial cloud vendors that's just you know i mean your credit card and just start computing or um and part of it is just some of the training sessions kind of walk people through like here's it's actually it can be pretty point-and-click for certain types of routine analyses um and it doesn't actually have to cost that much but that's just to get started yeah i think from my perspective i'll be very quick uh it's important to get as close as possible to the people that are actually running the workflow both in terms of how you integrate with the tools and environments in which they're familiar with working and also how you engage with them a lot of these types of development activities are very much pairwise or collaborative sort of engagements and i think it's fairly important to really work closely with the people that are going to be running or building these things i just want to add from the perspective of nci cloud resources i think all three cloud resources have very extensive documentation on how to get started using containers using workflows on their uh platforms and we understand that moving from like hello world examples to production level analysis is really not that straightforward but it's very useful in my opinion to have those examples uh for folks who are new to this to uh kind of understand what's needed to start running analysis and the other thing is to include benchmark information especially for cloud computing cost is a huge issue people who are new to cloud computing they have no idea how much it's going to cost to run a particular rnc example or workflow so on some of our cloud resources they include information such as what kind of vm you should use or you can use and that would incur how much cost so i think that type of
information is going to help people get started i'm glad you brought that last point up i do think this is key to the average researcher not involved in kind of a standard you know people who are now involved in sars-cov-2 you know maybe they have a solution in place cloud or other but if you're talking to an average researcher with a brand new project that's generating a ton of data where do they bring that data how much will it cost them uh and i think that's a consideration that's um not often discussed when we're discussing cloud computing you know sure it's a solution it's just unclear often like how long will things run you're running a metagenome assembly this is um hard to estimate there are estimators out there but even they take some compute so um so marissa do you mind putting up the last slide so you know i'd like to thank our panelists again i believe that this topic is actually extremely broad and could cover you know obviously well more than the small hour that we've given it but i thank all the speakers for sharing some of their insights with us um we will continue this conversation online uh the notes document or the shared document is available to continue asking questions if you have you can uh feel free to uh sign up to nmdc you know we do care about this community engagement quite a bit we have a pretty large team that focuses on this like kyle had mentioned you know i do think it's important to interact with the actual users to see what uh is actually the most useful for each and every project or group uh the next community conversation will happen uh february 2nd around science gateways um and you know please join us signing up to our newsletter or becoming an nmdc champion and i think that's it for today thank you very much thanks thanks everyone thank you

2022-01-19 01:58