Deliver better Insights with SQL Server 2019 Big Data Cluster for data edge core and | BRK2382

Welcome, everyone, and thanks for joining our presentation. I am Prasad Venkatachar, product manager from the Lenovo Data Center Group, where I focus on databases and big data analytics. I'm joined by Travis Wright. He's a principal program manager, well known in SQL Server circles, and he is leading the big data product.

The presentation today is about how to deliver better insights from all the data that is getting generated at the edge, core, and cloud using SQL Server Big Data Clusters. On the agenda: first, the data management pieces you have to consider for data generated at the edge, core, and cloud with the Microsoft SQL Server 2019 products. Then Travis will walk through SQL Server 2019 Big Data Clusters, and I'll talk through our performance and scalability testing of a big data cluster and the top three use cases you can consider for getting started with big data. We also have a couple of interesting demos showing BDC as well as high-performance SQL Server 2019, and we'll have time for Q&A. By the end of this session you will walk away knowing what a big data cluster is and how to get started with some of the use cases you have in your organization.

With that said: in Monday's keynote, Satya highlighted the importance of edge computing. On that note, I want to ask one big question. As per Gartner, in 2020, what percentage of data is going to be generated and analyzed at the edge before it moves to the core and cloud? Any guesses? It's 75 percent. So 75 percent of the data is going to be generated and analyzed at the edge. That's why edge computing is going to be increasingly important for organizations building smart cities, smart verticals, and smart homes.
For example, Chick-fil-A, the leading restaurant chain, captures all the point-of-sale keystroke information at the edge, maps it with the smart kitchen sensors, and adjusts its demand forecast. The same applies to cashierless checkout: if the products in your shopping bag don't match the items you scanned, an alert should pop up immediately. Edge computing is all about how quickly you respond to data that needs to be analyzed before it goes to the core or cloud, because it relates to real-life, real-time events.

What you process at the core is different. Once the data moves from the edge to the core, you carry out a massive analysis of it, build interesting insights and patterns, and feed the intelligence generated at the core back to the edge, thereby making the edge devices more intelligent. When the data moves to the cloud, because of the economies of scale, you can store it and carry out this analysis on an even larger scale. So as you move forward, it's important to consider how you architect your solutions for all the data that is getting processed at the edge, core, and cloud.

When you deal with edge computing, you need the servers to be very lightweight and compact, able to sit under the shelves, but powerful enough to deliver robust performance. What I'm showing here is an SE350 Lenovo ThinkSystem edge server running SQL Server Standard edition. We scaled this from 4 cores all the way up to 16 cores, and as you see in the graph, it provides linear scalability as we scale from 4 to 16 cores, peaking at around 1.8 million HammerDB transactions per minute. At the same time, as far as IOPS is concerned, it delivers almost 385K IOPS. That is almost the performance of an entry-level or mid-range storage array, delivered by an edge server itself. So this is an excellent solution for any point-of-sale application or the edge use cases I referred to previously.

Okay, I think this slide has a lot of animation. So, moving from edge to core.
Lenovo has built a complete depth and breadth of solutions using SQL Server, and there are two interesting solutions that we announced exclusively for this Ignite conference. One is a very scalable solution on Azure Stack HCI, where you can scale SQL Server from a two-node configuration all the way up to a ten-node configuration. For data warehouse, we have Microsoft-certified solutions from less than 10 terabytes all the way up to 200 terabytes.

As for big data solutions, Lenovo has been working on these for several years; we have had solutions with Cloudera and Hortonworks. But what is the game changer here? Since we have worked on these big data opportunities for a long time, some customers used to ask three things. First, how do I build a unified data management strategy across structured and unstructured data? Second, how do I independently scale compute and storage, because not all the data being generated needs to be treated the same way: some workloads are compute-intensive, some are storage-intensive. And third, as we move forward, everyone is looking at a containerized, Kubernetes-enabled platform, because they see that as the way forward. To combine all three benefits, Microsoft has got a perfect answer, and that is SQL Server 2019 Big Data Clusters. We announced the solution for this Ignite conference, and to talk through Big Data Clusters I'll pass it over to Travis.

Okay, thank you, Prasad. You probably saw the announcement that SQL Server 2019 went generally available this week. It's been a couple of years.

So SQL Server is out. We're super excited about it, our customers are super excited about it, and probably the most significant thing that we've added in this release is big data clusters. As you'll see in the next few slides, it really redefines SQL Server from what you know today. If you think about SQL Server for 25 years, it's been more or less a relational database engine: rows and columns. With big data clusters, as I'll show you today, it really changes the game about what SQL Server actually is and what it is capable of doing for you.

Let's start by covering a few key principles of what we've done in SQL Server 2019. The first is that we've invested a lot in creating data integration capabilities. Everybody loves doing ETL, right? Writing those ETL pipelines, building them, and maintaining them? No, nobody loves that. But we still need to integrate our data. There are two ways of doing this: we can do data integration through data movement, which is ETL, or we can integrate our data through data virtualization, where we leave the data in place where it currently lives and virtually represent it inside of SQL Server, so that our applications can query that data in SQL Server, and at query time SQL Server goes and gets the data from the actual source.

In SQL Server 2016 we introduced the PolyBase feature for the first time, and that was how we enabled you to access data that was in Cloudera, Hortonworks, or Azure Blob Storage from the context of a SQL Server external table. To all the application developers, report authors, and so on sitting on top of SQL Server, that external table looks just like any other table. But when you query it, SQL Server goes and retrieves the data from the big data system.
It brings the data back as though it were in SQL Server, but it isn't; it is just virtually represented there by an external table. We've now extended this concept of PolyBase and data virtualization to incorporate a number of other data sources: Oracle, Teradata, other SQL Server instances, some of our SQL-based cloud services like Azure SQL Database and Data Warehouse, as well as NoSQL databases like MongoDB. Through the MongoDB API you can get into Cosmos DB as well. And then, on Windows only for now, and eventually on big data clusters and Linux, you'll be able to use really any ODBC driver to access any ODBC-compliant data source and virtually represent that data inside of SQL Server. This makes it very easy to integrate your data across different data sources, and if we have some time at the end I'll show you a demo of how this all works.

One of the things we found with the implementation of PolyBase over Cloudera and Hortonworks, when we initially did that in SQL Server 2016, was that customers found it a difficult integration to make. That was because at the time big data ran on Linux and SQL Server ran only on Windows. Things like pass-through Active Directory authentication didn't work, and it wasn't very fast when you were trying to access data in Cloudera or Hortonworks; there were too many hops. So we said: okay, we have SQL Server on Linux now, as of SQL Server 2017. Why don't we just go get Spark and HDFS, the key foundational building blocks of a big data solution? They're open source, from the Apache Foundation. Let's go get those and put them in the box with SQL Server.
That way we don't have these big integration hops between our product and something like Cloudera. And because SQL Server runs on Linux, we can make the whole thing Linux-based. While we're at it, because everybody's doing Kubernetes and containers these days, let's just containerize the whole thing and run it all on Kubernetes, so it's elastically scalable, easy to deploy, and easy to update. That's how the solution works.

The thing that's radically different about big data clusters in SQL Server 2019 is that we have Spark and HDFS in the box. That enables you to scale out the storage capacity and compute of SQL Server much, much bigger than what you would normally do with a traditional SQL Server. Because you have HDFS there, you can store data up to a thousand times greater in volume than what you would typically put in a SQL store today.

Today, people might typically put maybe tens of terabytes of data into a SQL Server data warehouse, for example. Now you can go up into the tens or hundreds of petabytes using HDFS as your distributed store, and you can store unstructured data in HDFS. Then you have Spark there to do in-memory distributed compute across all of that data in HDFS, which gives you another dimension of scale-out compute in addition to the SQL Server engine itself.

Then, just to make things extra interesting, we put a SQL Server instance on every HDFS data node. Now when you define an external table over your data lake in the SQL Server master instance and query that external table, we push the query down to the SQL Server instances co-located on the data nodes where the data is. Each of those SQL Server instances opens up and reads and writes the HDFS-native file formats, like Parquet files or CSV files, and brings the data back up to the master instance. So there's no need to integrate with Cloudera in some way like we did in SQL Server 2016; everything is native to SQL Server.

Now, to speed up query performance, we introduced a layer called a data pool. A data pool is a set of SQL Server instances that are provisioned as containers on Kubernetes and used as a distributed store of data coming from some external data source. Let's say you have an Oracle database over here, for example, and it is an operational database, so you don't want to impact its performance. Or maybe the network latency between your SQL Server instance and your Oracle instance is too high to be useful in terms of query performance. So you want to offload those queries from Oracle and bring the data local to SQL Server so your queries are super fast.
You can define an external table over Oracle, define an external table over the data pool, and move the data from Oracle into the data pool using nothing but T-SQL queries. When you move data into the data pool, it is automatically distributed across all of the data pool instances, so it gets load balanced across however many instances you have. When we put data into the data pool, we also automatically put columnstore indexes on top of it for super fast query performance, up to a hundred times faster than without a columnstore index, and we compress the data on disk by up to 10x.

Now when you run a query, instead of going to Oracle to get the data at query time, we go to the data pool. There we execute the filter and local aggregation parts of the query in parallel across however many data pool instances you have, and then send the data back up to the master instance.
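As a rough illustration of the data pool flow just described, here is a minimal Python sketch. It is a toy model under stated assumptions, not how SQL Server implements it: the function names, the round-robin distribution, and the sample rows are all hypothetical, and the per-instance work runs in a plain loop rather than in parallel. It only shows the shape of the idea: rows are spread across instances, each instance filters and partially aggregates its own rows, and the master merges the partial results.

```python
from collections import defaultdict


def distribute_round_robin(rows, n_instances):
    """Spread rows across n_instances shards, one row at a time (assumed policy)."""
    shards = [[] for _ in range(n_instances)]
    for i, row in enumerate(rows):
        shards[i % n_instances].append(row)
    return shards


def local_filter_and_aggregate(shard, min_amount):
    """Work done on ONE instance: filter its rows, then sum per region."""
    partial = defaultdict(float)
    for region, amount in shard:
        if amount >= min_amount:
            partial[region] += amount
    return dict(partial)


def combine_partials(partials):
    """Work done at the master: merge the per-instance partial sums."""
    total = defaultdict(float)
    for partial in partials:
        for region, subtotal in partial.items():
            total[region] += subtotal
    return dict(total)


def run_query(shards, min_amount):
    # A real system would run the local step on every instance in parallel;
    # a list comprehension keeps this sketch simple and deterministic.
    partials = [local_filter_and_aggregate(s, min_amount) for s in shards]
    return combine_partials(partials)


if __name__ == "__main__":
    # Hypothetical (region, amount) rows, as if copied in from an external source.
    rows = [("east", 10.0), ("west", 5.0), ("east", 2.5),
            ("west", 20.0), ("north", 7.5)]
    shards = distribute_round_robin(rows, n_instances=3)
    print(run_query(shards, min_amount=5.0))
```

The point of the design is that only the small partial results travel back to the master, not the raw rows, and the answer is the same no matter how many instances the rows were spread across.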

This is a new scale-out architecture for storing data in SQL Server instances and for doing distributed, parallel SQL queries across that data. Again, this completely redefines the way you think about SQL Server.

Then, because we really want our queries to be super fast, you can also have a compute pool. A compute pool is a set of SQL Server instances that are again provisioned as containers on Kubernetes, and these instances don't store any data. They're there just for, you guessed it, compute. What happens if you have a compute pool over either the storage pool, where you have the data in HDFS, or over the data pool? We first push the query down to the SQL Server instances in the storage pool or in the data pool, wherever the data is, and the filters and local aggregations happen in parallel across all of them. Then the compute instances ingest the data in parallel from each of those data sources. Think of it as multiple straws sucking data up into the compute pool instances. Once the data has been ingested into the compute layer, the compute instances talk to each other to do cross-partition aggregations and sorting before returning the results to the master instance.

This offloads that type of query execution from the master, so your master can be doing OLTP-type activities at full scale while, in the background, analytics-type queries run at scale in a distributed scale-out compute architecture that is new to SQL Server. Hopefully you can see that this is a completely new SQL Server in many ways. You still have SQL Server, and you can use it to do OLTP at scale; we'll show you a really cool demo about that in a minute.
You can use SQL Server for data warehousing, and now you can use SQL Server for your big data and analytics.

I want to talk about one more thing, which is how big data clusters provide you a complete AI platform. What I mean is that it covers everything, starting from ingestion of the data, where you can use either SQL Server Integration Services or the Spark Streaming that's built in, or you can use the WebHDFS endpoint to ingest data into HDFS. You then have a choice of how you store the data. You can put it into the SQL Server master instance, just like you put data into SQL Server today; no difference there, and all of your applications continue to work. You can put it into HDFS, especially if it's high-volume or unstructured data. Or you can put it into the data pools, where the data is distributed across all of those SQL Server instances.

Once you've got your data there, you can use either Spark or SQL to prepare it. Spark is especially good at this because it can do in-memory distributed compute over the data to clean it up or do some aggregations, and you can set up Spark jobs to run on a periodic basis for those data preparation activities.

Once your data has been prepared, the next step is to train your machine learning models. With big data clusters you have a choice. One option is the machine learning services built into the SQL Server master instance, where you can run R or Python.

Along with those, you can now use Java in 2019 to train your machine learning models in the database, so you never have to take your data out to go train it on some machine learning server. You train in the database, keep the data within the secure boundary of SQL Server, and leverage all of the underlying horsepower, in terms of memory and cores, that's typically there for a SQL Server. Or you can use Spark to execute your machine learning training. You can use the machine learning algorithms and packages that we provide out of the box, such as TensorFlow, Caffe, and scikit-learn, in either SQL Server ML Services or in Spark.

Once you have your models trained, you have a couple of different options for how you operationalize them. One of the easiest ways is to put some R, Python, or Java in a SQL stored procedure. That code retrieves the model from the database, where it's stored as a varbinary object, takes some input parameters that come into the stored procedure, scores them against the model, and returns the scored results as part of the stored procedure's result set. Or, if you're doing a data ingestion task and want to score the data on the way into the database, you could have a stored procedure that does an insert and, as part of that insert, scores against the model, so you insert the scored value at the same time as the data. Those are just a few examples.

Another way to do this is in Spark. You could have a Spark job that retrieves the model from, say, where you're storing it in HDFS, and scores some of the data against the model using R or Python.
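The stored-procedure scoring pattern described above (load a model stored as a binary blob, score the incoming parameters, return the scores) can be sketched in plain Python. This is only an illustration under assumptions: the JSON-encoded blob stands in for whatever serialized model the varbinary column would hold, the tiny logistic model and its weights are made up, and the wiring into SQL Server (for example through `sp_execute_external_script`) is not shown.

```python
import json
import math


def serialize_model(weights, bias):
    """Turn a (hypothetical) logistic model into bytes, as it might sit in a varbinary column."""
    return json.dumps({"weights": weights, "bias": bias}).encode("utf-8")


def score(model_blob, features):
    """Deserialize the model and return a probability for one input row."""
    model = json.loads(model_blob.decode("utf-8"))
    z = model["bias"] + sum(w * x for w, x in zip(model["weights"], features))
    return 1.0 / (1.0 + math.exp(-z))


def score_rows(model_blob, rows):
    """Score many rows, pairing each input with its score (like a result set)."""
    return [(row, score(model_blob, row)) for row in rows]


if __name__ == "__main__":
    # Made-up weights purely for illustration.
    blob = serialize_model(weights=[0.8, -0.4], bias=0.1)
    for row, probability in score_rows(blob, [[1.0, 2.0], [3.0, 0.5]]):
        print(row, round(probability, 3))
```

The same `score` function could be called once per inserted row to cover the "score on the way in" variant mentioned in the talk.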
Scala works inside Spark for this as well. Lastly, for operationalizing your model, we provide a toolkit that you can run in either Azure Data Studio or VS Code. It lets you take an application you've written that does the model scoring, automatically package it up, expose it as a REST endpoint, and submit it. We take care of containerizing the application, provisioning the container on the big data cluster, and managing its lifetime and the access to it through the integrated role-based security model.

That way your operationalized models sit right next to the data on which they were trained, in one system. Hopefully this was a quick overview of big data clusters, and you see how we cover this spectrum. First, data integration with PolyBase and all the new capabilities there help you integrate your data across different data sources. That's really important when you're doing analytics, because you often need to bring together multiple data sets and feed them into your machine learning model training. Secondly, it provides a shared data lake on which you can run either Spark jobs or SQL queries. And lastly, it provides a complete AI platform for doing everything from ingesting the data to storing it, preparing it, training models, and operationalizing models. With that, I'll hand it back over to Prasad to pick up how we've been doing our partnership around big data clusters.

Thanks, Travis. As Travis explained, the big data cluster is built on the Kubernetes platform. For testing the big data cluster we used a half-rack Lenovo configuration. It consisted of one dedicated Kubernetes master node and eight Kubernetes storage nodes. We had all eight nodes used for the storage pool; the goal was to provide a scalable storage platform using the big data cluster. We used four compute pools, and for caching, as Travis explained, we used four data pools. We had one SQL Server master instance and one Spark head node for executing all the Spark jobs.

Some of the configuration details: we used nine Lenovo ThinkSystem SR650 servers. Each server is loaded with Intel Xeon Platinum 8160 processors and 768 GB of memory.
As far as storage is concerned, we used Intel P4600 NVMe drives. On the software side, BDC was powered by the Spark 2.4.2 engine and Kubernetes 1.15, all up and running on Ubuntu 16.04.

For the testing we used TPC-DS. In case you're not familiar with it, TPC-DS is a decision support benchmark. It models the retail buying experience of products through store, catalog, and web channels. It involves complex SQL queries, much more so than TPC-H, if you have used that: TPC-H has 22 queries, while TPC-DS has 99 complex queries, with multiple joins and on the order of a thousand lines of code. We used Spark SQL as the engine to evaluate BDC performance. The reasoning was that the SQL Server engine has been proven for many years; now that Microsoft has introduced the big data cluster, we wanted to evaluate Spark SQL performance on BDC.

Here are the performance and scalability results. As we scaled the data set from 10 terabytes, with 56 billion rows, up to 100 terabytes, with up to 560 billion rows, we saw uniform scalability in performance. On the data load, loading the 10-terabyte data set took around 3.69 hours, and when we increased to 100 terabytes it took 38.28 hours. Data load is a write-intensive workload, so this means BDC delivered uniform scalability for a write-intensive workload. What it proves is that the HDFS layer sitting on the storage pool provided a very linear performance benefit: as you scale the number of storage pool nodes, you get a linear benefit in performance.
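The linearity claim for the data load can be checked with a little arithmetic on the two figures quoted in the talk (10 TB in 3.69 hours, 100 TB in 38.28 hours). The sketch below is only that arithmetic; the function names are made up, and "scaling efficiency" is simply defined here as the ratio of the two load throughputs, where 1.0 would be perfectly linear.

```python
def throughput_tb_per_hour(size_tb, hours):
    """Average load rate for one run."""
    return size_tb / hours


def scaling_efficiency(small_run, large_run):
    """Throughput of the large run divided by throughput of the small run."""
    return throughput_tb_per_hour(*large_run) / throughput_tb_per_hour(*small_run)


# (data set size in TB, load time in hours), as quoted in the talk.
LOAD_10TB = (10.0, 3.69)
LOAD_100TB = (100.0, 38.28)

if __name__ == "__main__":
    for size_tb, hours in (LOAD_10TB, LOAD_100TB):
        rate = throughput_tb_per_hour(size_tb, hours)
        print(f"{size_tb:.0f} TB loaded at {rate:.2f} TB/hour")
    print(f"scaling efficiency: {scaling_efficiency(LOAD_10TB, LOAD_100TB):.2f}")
```

With those inputs the load rate stays at roughly 2.6 to 2.7 TB per hour at both sizes, which is what "uniform scalability" means in practice here.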

On the compute side, the Spark engine running on BDC proved that it provides linear scalability in performance as we scale from 10 terabytes all the way up to 100 terabytes.

Not only that; there is another metric we measured: query elapsed time. Does it provide a uniform, consistent experience when we run these 99 complex queries at 10 terabytes and at 100 terabytes? I want to point out one query, query 24. At 10 terabytes it took around 1,300 seconds, and when we go all the way up to 100 terabytes it is 13,000 seconds. There is no spike for individual queries: executing all 99 queries at 10 terabytes or at 100 terabytes delivers uniform, consistent performance results.

Some of the optimizations we carried out: first, just like with SQL Server, we have to collect statistics, so histograms are collected, and we used the CBO, the cost-based optimizer. Spark memory was set to 65 GB, Spark executor memory was set to 65 GB, and we provided 6 GB as overhead. Each of the Spark executors had four cores assigned, with around 80 cores allocated in total. Those are the optimizations in the Spark layer. Also, the Kubernetes and Docker folders, which typically get placed under /var or /opt (for example /var/lib), we moved onto the NVMe drives, and we saw a significant I/O performance benefit from moving those folders into the NVMe storage layer.
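For readers who want to see what those Spark-layer settings might look like written down, here is a small Python sketch that maps the numbers from the talk onto standard Apache Spark property names and renders them as `--conf` arguments. The mapping is an assumption: the talk does not say which properties were set or how they were applied on the big data cluster, and reading "around 80 cores in total" as 20 executors of 4 cores each is one interpretation, not a stated fact.

```python
# Standard Spark property names; the values are the figures quoted in the talk.
SPARK_SETTINGS = {
    "spark.executor.memory": "65g",           # executor heap
    "spark.executor.memoryOverhead": "6g",    # off-heap overhead per executor
    "spark.executor.cores": "4",              # cores per executor
    "spark.sql.cbo.enabled": "true",          # cost-based optimizer
    "spark.sql.statistics.histogram.enabled": "true",  # column histograms
}


def to_spark_submit_args(settings):
    """Render a settings dict as a flat list of --conf key=value arguments."""
    args = []
    for key, value in sorted(settings.items()):
        args += ["--conf", f"{key}={value}"]
    return args


def executors_for(total_cores, cores_per_executor):
    """How many executors fit in a total core budget (assumed interpretation)."""
    return total_cores // cores_per_executor


if __name__ == "__main__":
    print(" ".join(to_spark_submit_args(SPARK_SETTINGS)))
    print("executors:", executors_for(total_cores=80, cores_per_executor=4))
```

Note that the cost-based optimizer only helps once table and column statistics have actually been collected, which matches the speaker's point about gathering histograms first.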
Now, switching from workload analysis to system analysis. At the Lenovo Data Center Group we care about how our systems perform as we scale the data set from 10 terabytes all the way up to 100 terabytes. As we see here, disk utilization, CPU utilization, and network utilization increase consistently as we increase the data set. That proves that system utilization also scales well as the data set grows.

With all this understanding, what we arrived at to get you started with big data clusters is three configurations. The starter rack configuration gets you around five servers. All of these system configurations are completely flexible based on your use cases; I'll talk through some use cases and how we arrive at a configuration. Then there is the standard environment, which is typically what we sell most of the time for enterprise customers; it is a half-rack environment. And then the advanced environment is a full-rack environment where we provide 18 servers. This is more for what Travis spoke about, the end-to-end scenario where you have Apache Spark and Spark machine learning and you're streaming data in real time. I have an example from one customer that illustrates how we arrive at a full-rack configuration.

With that said, here are some of the high-level use cases. One is accelerating business intelligence applications. It's a no-brainer; SQL Server has been doing this for a long period of time, so there is nothing much new about that. But what also helps is that, since the big data cluster is built on the Kubernetes platform, it can scale elastically. A new concept you're probably hearing about is CDW.
That's cloud data warehousing. Since BDC is built on the Kubernetes platform, you can scale elastically as your data set grows, so if you're looking into CDW, this could be one more use case. But the meat of the matter, where you will get a lot of benefit, is data lakes, because BDC integrates structured and unstructured data by providing HDFS and Spark together. If you're looking into building a data lake, this is an excellent solution. But maybe you say: no, I will get started one step at a time; I won't build a data lake right away, I will offload a certain portion of the data from my classical data warehouse appliance or system.

Offloading into a big data environment is something you can take care of too, using the storage pools, because they provide a scalable Hadoop file system, HDFS. Then there is streaming analytics: if you want to look into integrating all your IoT devices and building real-time intelligence, you can take care of that through streaming analytics, since BDC supports Kafka as well as Spark Streaming. And if you are looking at building recommendation engines for your products, or at a new business model like fraud identification or fraud detection, you can take care of that using the big data cluster as well.

Here is one example I wanted to illustrate. We recently engaged with a telecom customer, one of the US carriers, that perhaps some of you are using. This is about CDRs, call data records. They were storing lots of this data in their data warehouse appliance, and they realized they were not utilizing the appliance for what it's supposed to do, in terms of rich functionality: running complex queries, building reports, and so on. They had an excellent data ingestion framework, but what they were missing was prioritization. They used to shove all this data into the central data warehouse repository without prioritizing what data needs to be stored in the data warehouse, and as the data set grew they were already maxing out its storage capacity. What we recommended was to store all the high-value data, which is of business importance, in the SQL Server master instance.
The SQL Server master database takes care of the high-value data, and you offload the data kept for long-term retention into the storage pool of the big data cluster. Then, when they run a monthly billing cycle, they fire the same query, and T-SQL goes and fetches the data from both the traditional data warehouse and the big data side, I mean the storage pool and HDFS layer. You can integrate this data seamlessly.

Here is a sizing example I wanted to illustrate. Let's say they have CDRs, the call data records, growing at 10 terabytes, they're transforming and staging another 10 terabytes, and they're curating data at 4 terabytes. That adds up to 24 terabytes for the year 2018. Typically when you size these designs you plan three years ahead, so when you add it up it comes to around 70 terabytes. Then, to complete the sizing, you have to consider how much data there is, what your compression is, what the replication factor is, and what your work area and overhead are. If you consider all of that, the total comes to around 124 terabytes. I did this calculation over here; you can see it on the left side.
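The sizing steps in this example can be written out as a short Python sketch. The yearly volumes, the three-year horizon, the 124 TB requirement, and the per-node capacity (14 drives of 4 TB each, which the speaker quotes for the storage nodes) all come from the talk. The slide's actual compression, replication, and overhead factors are not given in the transcript, so `required_capacity_tb` is a generic formula with clearly hypothetical inputs and is not meant to reproduce the 124 TB figure.

```python
import math


def yearly_growth_tb(raw_tb, staging_tb, curated_tb):
    """Data added per year across raw, staging, and curated zones."""
    return raw_tb + staging_tb + curated_tb


def required_capacity_tb(data_tb, replication, compression_ratio, overhead_fraction):
    """Generic capacity formula (an assumption, not the slide's calculation)."""
    return data_tb * replication / compression_ratio * (1 + overhead_fraction)


def storage_nodes_needed(required_tb, drives_per_node, tb_per_drive):
    """Round up to whole nodes given raw capacity per node."""
    per_node_tb = drives_per_node * tb_per_drive
    return math.ceil(required_tb / per_node_tb)


if __name__ == "__main__":
    per_year = yearly_growth_tb(raw_tb=10, staging_tb=10, curated_tb=4)
    three_years = per_year * 3
    print("per year:", per_year, "TB; three years:", three_years, "TB")

    # Hypothetical factors, only to show how the formula behaves.
    example = required_capacity_tb(three_years, replication=3,
                                   compression_ratio=2.0, overhead_fraction=0.25)
    print("example requirement with made-up factors:", example, "TB")

    # Node count from the figures quoted in the talk.
    print("storage nodes:", storage_nodes_needed(124, drives_per_node=14, tb_per_drive=4))
```

Rounding up matters here: 124 TB divided by 56 TB per node is a little over two, so the design lands on three storage nodes.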

Know What is called a low-cost think. For them to offload, low-priority data there so we use the standard, hard disk drives so fourteen, hard disk drives multiply, before 256. Terabyte and divided, by 124, terabyte, of requirement, and what will land up is a three storage nodes three storage pools that's what we are showing up here so, since you need a three compute. Pools because it has to fetch these computers, will go and fetch the data from the storage pools you need a three compute pole and the, master instance serving all the the, high priority data so. This one example I want. To take this to a next example, where, here, is a banking customer so their requirement. Was to build very a, a. Complex. Workload. Their, importance is like it said these these are the reports that is needs to be built for the business execs right like so they but. The requirement. There is the lot of data is some, data stored in sickle server some data stored in Hadoop file system somewhere, in other. Databases like Oracle and Terra data so how do you build a cluster configuration for, such requirement, using BDC right so, and, 30 to 40 percent of their data, has been frequently accessed, and 40 to 50 percent of the column is being frequently accessed as well and it also involves. Some amount of complex, SPARC. Workload, so what would be arrived at E is like you know we wanted to provide a very scalable storage, pool that's why we ended up converting, into a three main storage pools and we. Need three compute, pools to fetch the data from the storage pool of the HDFS, layer and three, compute pools to go and spin up and get the data from Oracle, Altera data so, that means you have six compute pol since, this AB is a complex. Workload. Where you for. A business priority so we wanted a a chair design so that you. Know I mean your this, customer will not complain that ok I'll lose my master instance what do i do right so that's why we had this, three, computer. 
Master, this, one secret several instances, so. I'm since, we wanted to catch this result so that by next time they when they fired the query it need not to go and fetch this from storage pool or. From the external tables. Like Oracle Altera data so, we'd allocated, three storage pools so, if you sum up all these things we will allow up to the half rack configuration, which is in mind rack sorry. Nine node half rack configuration. So. Switching. To the another example, here in end-to-end scenario, so. I'll use the same illustration. What Travis just spoke, about so, here, what you are trying to do is like for example. You. Know when, you're building anything, like a a fried, enta fication for any of the financial institutions. Right so what they're looking at is like streaming, this data from, the. Credit-card devices, right in real time so, these, are the real-time messages, generated let's. Imagine that they're generating 500 k messages per second which translates, to 20 terabyte of data per day and your, goal is to build a machine learning more what, will it, has to look into your purchase patterns, let's say your purchase patterns for 10, years or 5 years of period of time and, also, the, purchase, patterns, like in turn in terms of whether the, distance between your previous transaction, to the next transaction right, like something you purchased in San Frisco the next in within 30 minutes your purchasing the transaction, is showed up as in New York so that within, 30 minutes of time so, much of a distance that's a if not, a right pattern right so it has to flock that partridge flock, that anomaly, so. So, what it has to do is let's imagine that you have 1 petabyte of data that, needs to be trained. Using, the logistic, regression, or a linear regression so. I. 
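The distance-versus-time pattern described above (a purchase in San Francisco followed 30 minutes later by one in New York) can be illustrated with a small rule-based check. This is only a sketch: the talk describes a trained machine learning model, not a fixed rule, and the function names, the coordinates and the 1,000 km/h threshold below are assumptions made for illustration.

```python
import math

# Assumption: anything faster than a commercial flight is treated as implausible.
MAX_PLAUSIBLE_KMH = 1000.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def is_suspicious(prev, curr):
    """Flag a transaction if the implied travel speed is implausible.

    prev and curr are (latitude, longitude, unix_seconds) tuples.
    """
    distance = haversine_km(prev[0], prev[1], curr[0], curr[1])
    hours = (curr[2] - prev[2]) / 3600.0
    if hours <= 0:
        # Two purchases at the same instant: suspicious only if far apart.
        return distance > 1.0
    return distance / hours > MAX_PLAUSIBLE_KMH


# Approximate city coordinates; the second purchase is 30 minutes after the first.
san_francisco = (37.7749, -122.4194, 0)
new_york = (40.7128, -74.0060, 30 * 60)
oakland = (37.8044, -122.2712, 30 * 60)

print(is_suspicious(san_francisco, new_york))  # cross-country in 30 minutes
print(is_suspicious(san_francisco, oakland))   # a short hop across the bay
```

A rule like this could serve as one input feature; the model described in the talk would combine it with years of purchase history.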
We have also used random forests. The workload involves both batch jobs and streaming jobs, so how do we arrive at a configuration? What we are providing here is a full-rack configuration, which gives you an end-to-end platform. We provided 15 storage pools. Three of them are dedicated to Kafka on NVMe, where the data ingestion happens in real time: as the data is ingested from the edge devices or the credit card devices, it is streamed right there, and you need high-performance NVMe for that, which is why we provided NVMe storage devices for Kafka. You also store the data for long-term retention, because all your historical purchase patterns, if that is 1 PB of data, need that much data storage, and that is what the rest of the storage pools provide.

For the compute pools, we allocated six for all the Kafka processing and six for Spark machine learning training using logistic regression or random forests. We also provided four data pools for immediately caching the results: if an anomaly is detected, you can go and flag it as a fraudulent transaction, so you do not run into any fraud. That is how we go about building fraud identification.

With these three examples, what we arrived at is how to size the big data cluster. What we recommend is 4 to 16 cores per pod. Some jobs need a much higher number of cores, for example Spark machine learning, Spark SQL, or the SQL Server master instance. The same is true for memory: for Spark SQL and the SQL Server master instance you have to go for higher memory. Certain pools need dedicated pods; don't mix a storage pool with a data pool and a compute pool, so you have to balance that. As far as the storage requirement for a storage pool is concerned, you typically account for three years of data, times the HDFS replication factor, plus the work area overhead, divided by the data compression; typically we account for 3x. This is the formula we arrived at, based on a large number of customer engagements.

So I have pretty much covered the half-rack and full-rack configurations. There are two things I need to highlight here.
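As a rough illustration of the storage sizing arithmetic, the sketch below reworks the call data record numbers quoted in the talk (10 + 10 + 4 TB per year, three years of planning, a 124 TB total, 14 drives per storage node). The 4 TB drive size is my reading of the recording, and `storage_pool_tb` is one possible interpretation of the spoken formula rather than the speaker's actual calculation, so treat both as assumptions.

```python
import math

# Yearly growth figures quoted in the talk, in terabytes.
RAW_CDR_TB = 10
STAGED_TB = 10
CURATED_TB = 4
PLANNING_YEARS = 3

yearly_tb = RAW_CDR_TB + STAGED_TB + CURATED_TB  # 24 TB for one year
planned_tb = yearly_tb * PLANNING_YEARS          # 72 TB, "around 70" in the talk


def storage_pool_tb(data_tb, replication, overhead_tb, compression):
    """Hypothetical reading of the spoken formula:
    (data * replication factor + work-area overhead) / compression ratio."""
    return (data_tb * replication + overhead_tb) / compression


# Total requirement and drive layout as quoted in the talk.
REQUIRED_TB = 124
DRIVES_PER_NODE = 14
DRIVE_TB = 4  # assumption: inferred from "14 drives ... 56 terabyte"

node_tb = DRIVES_PER_NODE * DRIVE_TB              # capacity of one storage node
storage_nodes = math.ceil(REQUIRED_TB / node_tb)  # round up to whole nodes

print(yearly_tb, planned_tb, node_tb, storage_nodes)
```

The replication factor, overhead and compression values behind the 124 TB figure are not stated in the recording, so the function is left uncalled here; plugging in your own values is the intended use.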
We provide, something called a system management node this is something, for a system management that, we call it as an exclave, like you know so that we are combining with on the same the. Kubernetes master, and then. Though. We used, a 40 gig switch but, it is completely flexible based, on your what kind of workload you, execute so we provide from 10 gig all the way up to 100 gigs which the 100 kick switch recent, some of the customers they want to use this as a aggregator.

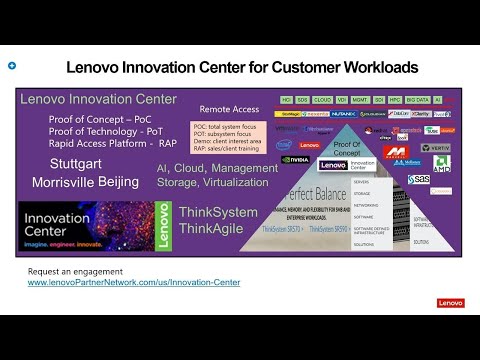

That Means you have one rack another rack and you want to aggregate the data from, top of these two racks unto the 100 Gig switch so thereby they, can aggregate the data and present, into the building. The reports and everything right so that's where we see, that 100 gigs which will be quite useful and, obviously. This, is a big data cluster which is all we are talking about all the data and everything be recommend, using a redundant, power supplies incomplete rack. So. In, addition to that the benefit of this things is we provide this as a complete, rack. Stacked, cable, and. We only into. Maybe I'll discuss with Travis like where we to have a BDC, loaded, preloaded, into this thereby you can be up and running in your big data cluster, in a shorter period of time so that's where we are looking, into this and. So. Here is something like a rubber meets the road right like in the in terms of Microsoft. We are also working with a Microsoft, on a sequel, a jociel, DBH so, what it does is completely, complements, with the sequel big data cluster, so, that means whatever the data which is getting generated from the IOT, edge divides, us they'll be streamed right through, the edge gateways, to the sequel DB edge and here. It has got a lightweight. Asacol, engine and a spark ml engine so on any of these. Data. Which gets tours in the DB H and this, data is number. Of H devices, will be streamed real time into the big data cluster, and in, the big data cluster, you build this machine learning model right based on the patterns, this the, intelligence, that is getting built. Ok, so it will be fed back to this hydro, sequel DB edge thereby. So, the DB h can, respond, to if there is a hiker in a spike in temperature, spike in pressure so, any of the men manufacturing. We can take care of this, okay, I think we running, short of time I'll quickly say that yeah so this is an end-to-end topology, should be looking into as you move forward from connecting from edge, core and cloud. 
As for benchmarks, we have been working on these for a long time, so we have some industry TPC benchmarks established over a period of time; that is what this illustrates. We have done benchmarks not only on big data but on IoT as well. I want to leave you with this slide: if you have use cases, bring the workload to us. We have innovation centers, so whether you want to build a proof of concept or a proof of technology with a big data cluster, we can make it happen. We have these innovation centers worldwide: in North America we have one in Morrisville, in EMEA we have one in Stuttgart, and for AP we have one in Beijing. With that said, I'll wrap up my presentation; it is time to switch over to the demo.

OK. Do you want to do it? OK, so I'll switch.

I have a quick, short demo on the power of the SQL Server 2019 engine complementing the Lenovo eight-socket system, the SR950. What we are illustrating here is fetching one trillion records, not a billion, one trillion records, in 100 seconds. What it shows is the power of SQL Server 2019 complementing the Lenovo SR950 eight-socket system. You see here 224 cores, 100% maxed out. The data stored here is 150 TB in a table called line item. This is one of the complex queries; you can imagine how much data aggregation is happening: summing, aggregating, counting, everything. This is the line item table I was talking about; it has one trillion rows. So one trillion rows are being processed and the complete report is built in under 100 seconds. This is the video that we captured.

So this is processing billions of rows a second, if you think of it that way, doing all those aggregations, average, sum, all these kinds of things, and pegging all 224 cores at the same time. Yeah, it has been 100 percent maxed out.

Each one is complementing the other. The SQL Server engine needs a faster processor, and it is completely NUMA-enabled. It is eight sockets, right, so you have a NUMA-enabled engine, and the system provides that kind of processing power and memory for the data from SQL Server.

OK, so I'll just quickly show you a demo of big data clusters. I'm using Azure Data Studio here, connected to a big data cluster, and I'm just going to create a demo database, and then we'll walk through some data virtualization scenarios. Here we go.
Let's try this. Yeah, I lost my connection. OK, let's try that again. OK, so we've got a demo database now. I'm just going to create an encryption key to encrypt my credentials with. Now we'll create a... OK, user error. All right, what am I doing wrong here? Oh, it switched my connection back over to the wrong thing again. All right, there we go.

OK, so now we've got our external data source pointing at an Oracle server that I have in this environment, using the credentials I just created. Now we'll create an external table. This just maps to the schema of the table sitting over there in Oracle and tells us where that table is in the Oracle server. Now I can go and see that I have a new department table in sys.tables, and I can just select from that table. It looks just like any other table inside SQL Server, except that it is a virtual representation of the table sitting over there in Oracle. At the time I query this table inside SQL Server, SQL Server is actually reaching out to Oracle to get that data out of the Oracle database.

Now let's also take a look here. I've got my big data cluster, I've got my SQL Server master instance with all the databases here, and right here inside Azure Data Studio I can also see the HDFS that's deployed in that big data cluster. I can see some of the files sitting there; for example, I have a directory here with a couple of CSV files in it. We can quickly see what one of these files looks like: a very basic CSV file that captures some sales. So how could I query this data from HDFS without loading it into the SQL Server master instance? Loading it would be the typical pattern, but I don't really want to do it that way. What I would do is run through this Create External Table wizard, which walks you through the whole process in a wizard-type experience. But I'll also show you how to do this using T-SQL, so we'll use this notebook here.
We'll create an external data source for the SQL storage pool. I'll create an external file format that tells SQL Server how to read these files: that this is a comma-separated file and that it has a header row in it. Then we create an external table, which defines the different columns for the data and tells SQL Server where the data files are by providing a path to those files, here in the slash demo slash sales data directory. You can see that I'm creating this over the directory and not over a particular file, so all the files in here will be treated as one unified table. Now that I've created that external table over the files sitting down there in HDFS, I can run a query over that data and retrieve it just by querying the external table inside the SQL Server master instance. I can also do aggregations over that data, and filters and predicates and so on, and push those kinds of tasks down to the storage pool SQL instances.

The other thing I can do is combine this data. I have this combined query here that uses the departments table we created, which is an external table over Oracle, and combines it with the sales data table, which is the table over the data files sitting in HDFS. I can run this query, and the SQL Server distributed query optimizer is smart enough to push the query to the Oracle side first, to filter on the departments that have Marketing as the name and retrieve just the department IDs that match, and then send those department IDs down to the storage pool to filter the results out of the files there. So it is smart enough to figure out the order of operations that will improve query performance the most, for example by minimizing data movement. It becomes very easy to combine data using standard T-SQL query syntax, like an inner join here, to join different data sets into one unified data set.

I think that's probably all the time we have today for demos, but we're available for Q&A if you want to come up here, or we probably have a few minutes to take some questions from the audience as well. We're kind of over time on the session, so feel free to come up or ask questions. Thank you for coming by.

Oh, we have a giveaway. Oh, nice. So you want to pick? Oh, I get to pick. All right, what an honor. OK: five, zero, zero, seven, seven, three. Right here. All right, she took a picture, so congratulations. What do they win? We didn't say. What is this? A USB laptop power bank. Oh, cool, I could use that; my MacBook's getting a little old with the battery. I am, actually, I am.
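Looking back at the combined query from the demo, the order of operations it describes (filter departments on the Oracle side first, then send only the matching department IDs down to the storage pool) can be mimicked with a toy model. This is not how SQL Server implements its optimizer; the in-memory lists, the `scan` and `join` helpers and the row values are all made up to show why pushing filters down moves less data.

```python
# Made-up stand-ins for the two external tables used in the demo.
departments = [  # would live in Oracle
    {"department_id": 1, "name": "Marketing"},
    {"department_id": 2, "name": "Engineering"},
    {"department_id": 3, "name": "Finance"},
]
sales = [  # would live in CSV files in HDFS
    {"department_id": 1, "amount": 100.0},
    {"department_id": 2, "amount": 75.0},
    {"department_id": 1, "amount": 250.0},
    {"department_id": 3, "amount": 40.0},
    {"department_id": 2, "amount": 10.0},
]


def scan(rows, predicate=lambda row: True):
    """Simulate a remote scan: only rows passing the predicate are moved."""
    return [row for row in rows if predicate(row)]


def join(dept_rows, sales_rows):
    """Inner join on department_id, done locally after the rows arrive."""
    names = {d["department_id"]: d["name"] for d in dept_rows}
    return [
        {"name": names[s["department_id"]], "amount": s["amount"]}
        for s in sales_rows
        if s["department_id"] in names
    ]


# Naive plan: pull both tables in full, then join and filter locally.
all_departments = scan(departments)
all_sales = scan(sales)
naive_result = [r for r in join(all_departments, all_sales) if r["name"] == "Marketing"]
naive_rows_moved = len(all_departments) + len(all_sales)

# Pushdown plan: filter departments remotely, then filter sales by the IDs found.
marketing = scan(departments, lambda d: d["name"] == "Marketing")
marketing_ids = {d["department_id"] for d in marketing}
marketing_sales = scan(sales, lambda s: s["department_id"] in marketing_ids)
pushdown_result = join(marketing, marketing_sales)
pushdown_rows_moved = len(marketing) + len(marketing_sales)

print(naive_rows_moved, pushdown_rows_moved)
print(naive_result == pushdown_result)
```

Both plans return the same rows; the difference is only in how many rows have to travel to the master instance before the join.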

2020-01-19 20:32